Deep Learning with Python

The code examples use the Python deep-learning framework Keras, with Tensor- Flow as a backend engine. Keras, one of the most popular and fastest-growing deep- learning frameworks, is widely recommended as the best tool to get started with deep learning.

PART 1 Fundamental of Deep Learning

1 What is deep learning?

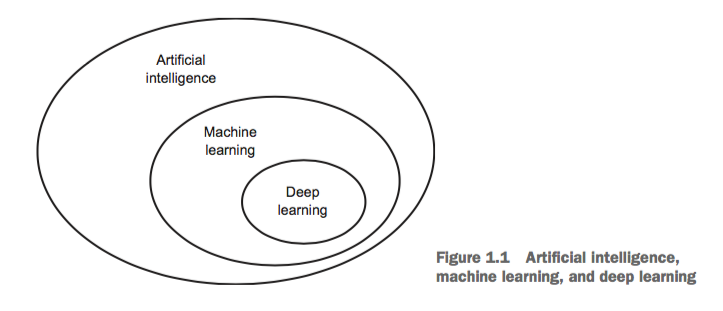

1.1 Artificial intelligence, machine learning, and deep learning

1.1.2 Machine learning

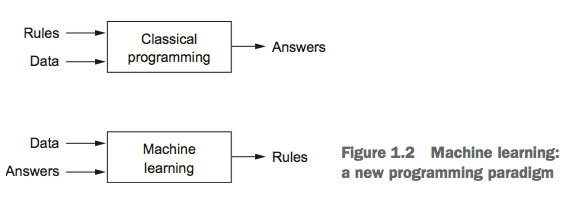

With machine learning, humans input data as well as the answers expected from the data, and out come the rules. These rules can then be applied to new data to produce original answers.

A machine-learning system is trained rather than explicitly programmed.

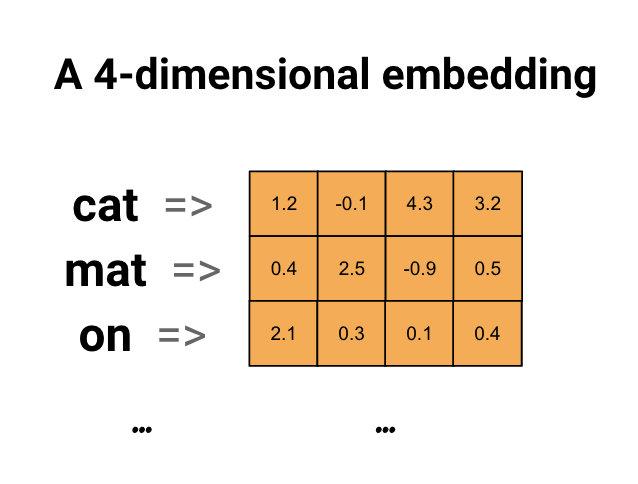

1.1.3 Learning representations from data

To do machine learning, we need three things:- Input data

- Examples of the expected output for the input data

- A way to measure whether the algorithm is doing a good job The measurement is used as a feedback signal to adjust the way the algorithm works. This adjustment step is what we call learning.

1.1.4 The “deep” in deep learning

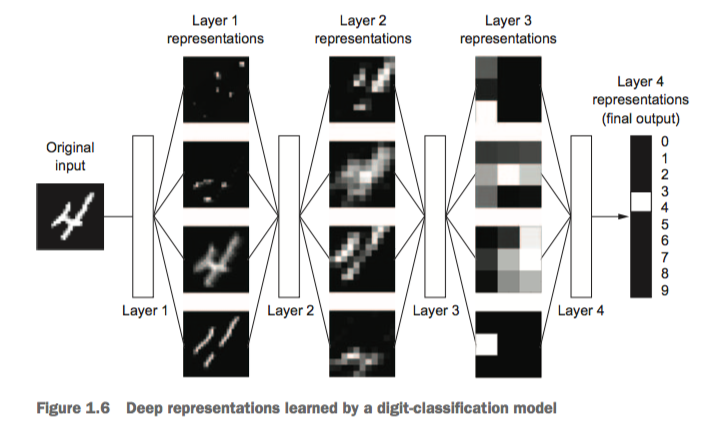

The deep in deep learning stands for this idea of successive layers of representations. How many layers contribute to a model of the data is called the depth of the model.

1.1.5 Understanding how deep learning works, in three figures

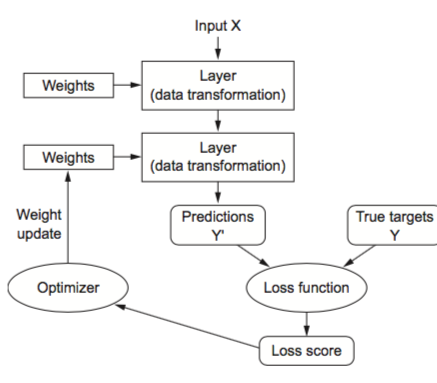

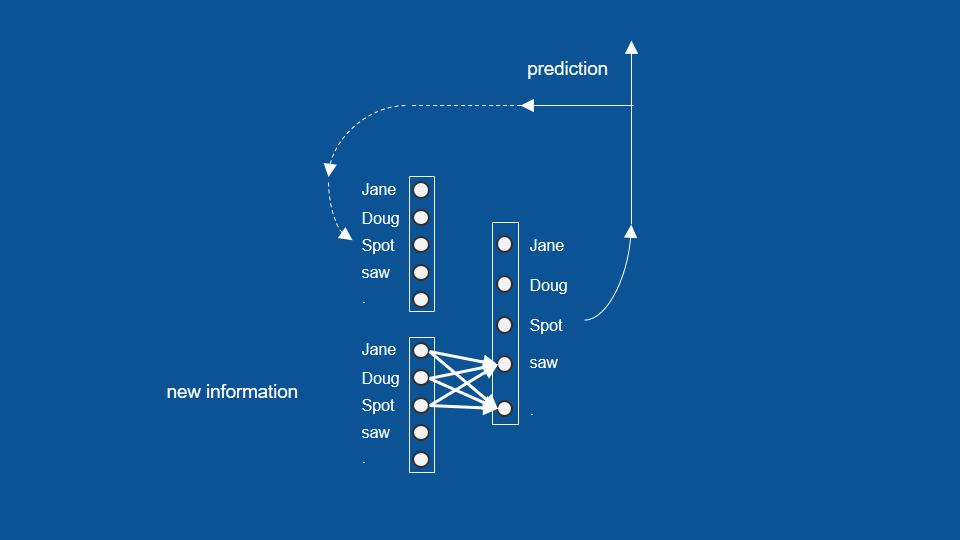

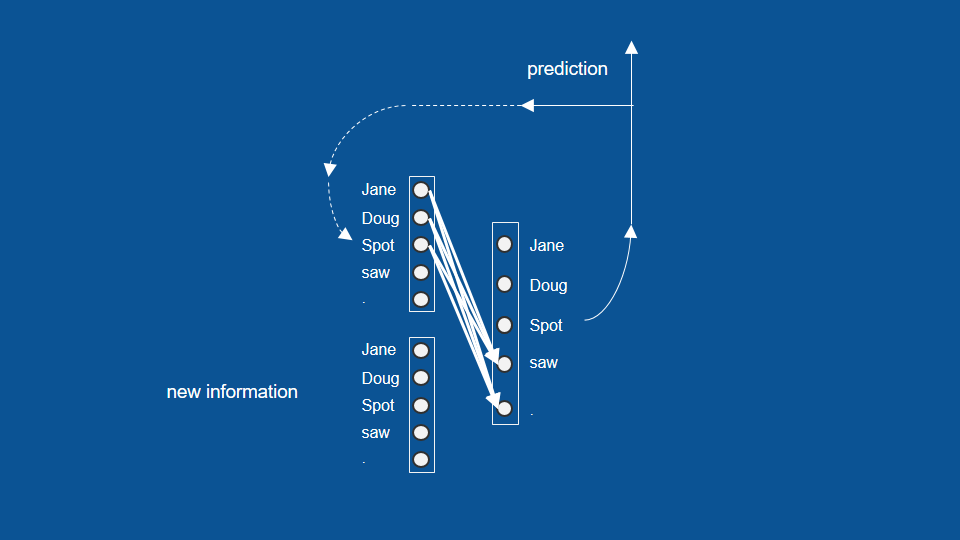

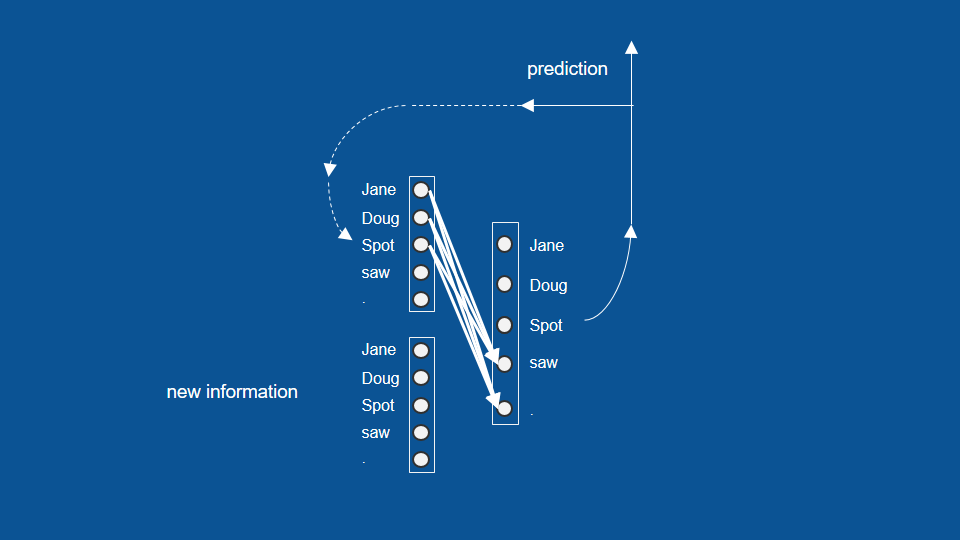

The machine learning is about mapping inputs (such as images) to targets (such as the label “cat”), this input-to-target mapping via a deep sequence of simple data transformations (layers) and that these data transformations are learned by exposure to examples.

What a layer to do about its input data is stored in the layer’s weights, the transformation implemented by a layer is parameterized by its weights.

In this context, learning means finding a set of values for the weights of all layers in a network, such that the network will correctly map example inputs to their associated targets.

The loss function(objective function) is to measure how far (score) this output is from what you expected.

The fundamental trick in deep learning is to use this score as a feedback signal to adjust the value of the weights a little, in a direction that will lower the loss score for the current sample. This adjustment is the job of the optimizer, which implements what’s called the Backpropagation algorithm: the central algorithm in deep learning.

Initially, the weights of the network are assigned random values, with every sample the network processes, the weights are adjusted a little in the correct direction, and the loss score decreases. This is the training loop, repeated a sufficient number of times, yields weight values that minimize the loss function.

1.2 Before deep learning: a brief history of machine learning

Most of the machine-learning algorithms used in the industry today aren’t deep-learning algorithms, deep learning isn’t always the right tool for the job — sometimes there isn’t enough data for deep learning to be applicable, and sometimes the problem is better solved by a different algorithm.1.2.1 Probabilistic modeling

1.2.2 Early neural networks

1.2.3 Kernel methods

Kernel methods are a group of classification algorithms, the best known of which is the support vector machine (SVM).SVMs aim at solving classification problems by finding good decision boundaries between two sets of points belonging to two different categories.

Decision trees, random forests, and gradient boosting machines

Decision trees are flowchart-like structures that let you classify input data points or pre- dict output values given inputs1.2.5 Back to neural networks

Since 2012, deep convolutional neural networks (convnets) have become the go-to algorithm for all computer vision tasks1.2.6 What makes deep learning different

The primary reason deep learning took off so quickly is that it offered better performance on many problems.1.2.7 The modern machine-learning landscape

A great way to get a sense of the current landscape of machine-learning algorithms and tools is to look at machine-learning competitions on Kaggle. Kaggle is a platform for predictive modelling and analytics competitions in which statisticians and data miners compete to produce the best models for predicting and describing the datasets uploaded by companies and users.1.3 Why deep learning? Why now?

The two key ideas of deep learning for computer vision — convolutional neural networks and backpropagation — were already well understood in 1989. So why did deep learning only take off after 2012?

The real bottlenecks throughout the 1990s and 2000s were data and hardware.

1.3.1 Hardware

- NVIDIA launched CUDA (https://developer.nvidia.com/about-cuda), a programming interface for its line of GPUs.

- Google revealed its tensor processing unit (TPU) project: a new chip design developed from the ground up to run deep neural networks, which is reportedly 10 times faster and far more energy efficient than top-of-the-line GPUs.

1.3.2 Data

If deep learning is the steam engine of this revolution, then data is its coal: the raw material that powers our intelligent machines, without which nothing would be possible.If there’s one dataset that has been a catalyst for the rise of deep learning, it’s the ImageNet dataset, consisting of 1.4 million images that have been hand annotated with 1,000 image categories (1 category per image).

1.3.3 Algorithms

The key issue was that of gradient propagation through deep stacks of layers. The feedback signal used to train neural networks would fade away as the number of layers increased.2 Before we begin: the mathematical building blocks of neural networks

2.1 A first look at a neural network

In machine learning, a category in a classification problem is called a class. Data points are called samples. The class associated with a specific sample is called a label.

The problem we’re trying to solve here is to classify grayscale images of handwritten digits (28 × 28 pixels) into their 10 categories (0 through 9). We’ll use the MNIST dataset assembled by the National Institute of Standards and Technology (the NIST in MNIST):

- a set of 60,000 training images

- a set of 10,000 test images

- train_images and train_labels form the training set

>>> train_images.shape

(60000, 28, 28)

>>> len(train_labels)

60000

>>> train_labels

array([5, 0, 4, ..., 5, 6, 8], dtype=uint8)

>>> test_images.shape

(10000, 28, 28)

>>> len(test_labels)

10000

>>> test_labels

array([7, 2, 1, ..., 4, 5, 6], dtype=uint8)

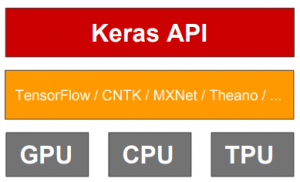

Keras is a high-level neural networks API, written in Python and capable of running on top of TensorFlow, CNTK, or Theano.

To set up Keras

pip install keras

pip install tensorflow

pip list | grep -i keras

pip list | grep -i tensorflow

>>> import tensorflow as tf

Illegal instruction (core dumped)

Breaking Changes Prebuilt binaries are now built against CUDA 9.0 and cuDNN 7. Prebuilt binaries will use AVX instructions. This may break TF on older CPUs.You have at least two options:

- Use tensorflow 1.5 or older You will miss out on new features, but most basic features and documentations are not that different.

- Build from source

Try to uninstall them then downgrade to a working version 1.5:

sudo pip3 uninstall Tensorflow

sudo pip install tensorflow==1.5

conda install -c anaconda tensorflow

conda install -c anaconda keras

conda install -c anaconda opencv # 3.4.2

# conda install -c conda-forge opencv=3.2.0 # 4.1.1

pip install opencv-python

pip install opencv-contrib-python

Here is an example of the flow:

- Loading the MNIST dataset in Keras MNIST database of handwritten digits is a dataset of 60,000 28x28 grayscale images of the 10 digits, along with a test set of 10,000 images.

- train_images, test_images: uint8 array of grayscale image data with shape (num_samples, 28, 28).

- train_labels, test_labels: uint8 array of digit labels (integers in range 0-9) with shape (num_samples,).

- Build the network architecture This network consists of a sequence of 2 Dense layers, which are densely connected (also called fully connected) neural layers.

- Pass an input_shape argument to the first layer.

- Some 2D layers, such as Dense, support the specification of their input shape via the argument input_dim

- If you ever need to specify a fixed batch size for your inputs (this is useful for stateful recurrent networks), you can pass a batch_size argument to a layer. If you pass both batch_size=32 and input_shape=(6, 8) to a layer, it will then expect every batch of inputs to have the batch shape (32, 6, 8).

- units is a positive integer which decides the dimensionality of the output space.

- activation is the element-wise activation function passed as the activation argument

- kernel is a weights matrix created by the layer

- bias is a bias vector created by the layer (only applicable if use_bias is True).

- The compilation step Before training a model, you need to configure the learning process, which is done via the compile method.

- An optimizer The mechanism through which the network will update itself based on the data it sees and its loss function. This could be the string identifier of an existing optimizer (such as rmsprop oradagrad), or an instance of the Optimizer class. See: optimizers.

- A loss function How the network will be able to measure its performance on the training data, and thus how it will be able to steer itself in the right direction. This is the objective that the model will try to minimize. It can be the string identifier of an existing loss function (such as categorical_crossentropy or mse), or it can be an objective function.

- A list of metrics Metrics to monitor during training and testing. For any classification problem you will want to set this to metrics=['accuracy']. A metric could be the string identifier of an existing metric or a custom metric function.

- Preparing the image data We transform it into a float32 array of shape (60000, 28 * 28) with values between 0 and 1.

- Preparing the labels

- y is a class vector(integers from 0 to num_classes) to be converted .

- num_classes total number of classes.

- dtype The data type expected by the input, as a string (float32, float64, int32...)

# 1 Loading the MNIST dataset in Keras

from keras.datasets import mnist

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# 2 Build the network architecture

from keras import models

from keras import layers

network = models.Sequential()

network.add(layers.Dense(512, activation='relu', input_shape=(28 * 28,)))

network.add(layers.Dense(10, activation='softmax'))

output = activation( dot(input, kernel) + bias )

keras.layers.Dense(units, activation=None, use_bias=True, kernel_initializer='glorot_uniform', bias_initializer='zeros', kernel_regularizer=None, bias_regularizer=None, activity_regularizer=None, kernel_constraint=None, bias_constraint=None)

# 3 The compilation step

network.compile(optimizer='rmsprop',

loss='categorical_crossentropy',

metrics=['accuracy'])

#4 Preparing the image data

train_images = train_images.reshape((60000, 28 * 28))

train_images = train_images.astype('float32') / 255

test_images = test_images.reshape((10000, 28 * 28))

test_images = test_images.astype('float32') / 255

>>>

>>> x = np.array([1, 2, 2.5])

>>> x

array([1. , 2. , 2.5])

>>>

>>> x.astype(int)

array([1, 2, 2])

#5 Preparing the labels

from keras.utils import to_categorical

train_labels = to_categorical(train_labels)

test_labels = to_categorical(test_labels)

> labels

array([0, 2, 1, 2, 0])

# `to_categorical` converts this into a matrix with as many

# columns as there are classes. The number of rows

# stays the same.

> to_categorical(labels)

array([[ 1., 0., 0.],

[ 0., 0., 1.],

[ 0., 1., 0.],

[ 0., 0., 1.],

[ 1., 0., 0.]], dtype=float32)

使用summary指令review一下整個model:

network.summary()

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) (None, 512) 401920

_________________________________________________________________

dense_2 (Dense) (None, 10) 5130

=================================================================

Total params: 407,050

Trainable params: 407,050

Non-trainable params: 0

- 401920 (28 x 28 + 1 ) x 512 = 401920

- 5130 (512 + 1 ) x 10 = 5130

Keras models are trained on Numpy arrays of input data and labels.

We’re now ready to train the network by calling the network’s fit method in Keras:

fit(self, x=None, y=None, batch_size=None, epochs=1, verbose=1, callbacks=None, validation_split=0.0, validation_data=None, shuffle=True, class_weight=None, sample_weight=None, initial_epoch=0, steps_per_epoch=None, validation_steps=None)

- x Numpy array of training data.

- y Numpy array of target (label) data.

- batch_size Integer or None. Number of samples per gradient update. If unspecified, it will default to 32.

- epochs Integer. Number of epochs to train the model. An epoch is an iteration over the entire input and target data provided. Note that in conjunction with initial_epoch, epochs is to be understood as "final epoch". The model is not trained for a number of iterations given by epochs, but merely until the epoch of index epochs is reached.

- shuffle Boolean (whether to shuffle the training data before each epoch) or str (for 'batch'). 'batch' is a special option for dealing with the limitations of HDF5 data; it shuffles in batch-sized chunks

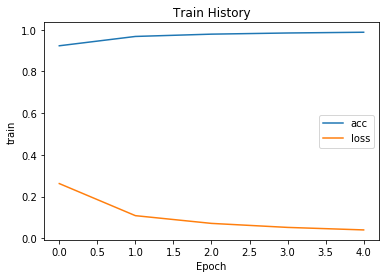

fit() returns a History object.

Its History.history attribute is a record of training loss values and metrics values at successive epochs, as well as validation loss values and validation metrics values (if applicable).

>>> train_history = network.fit(train_images, train_labels, epochs=5, batch_size=128)

Epoch 1/5

60000/60000 [==============================] - 7s 118us/step - loss: 0.2550 - acc: 0.9260

Epoch 2/5

60000/60000 [==============================] - 7s 109us/step - loss: 0.1028 - acc: 0.9697

Epoch 3/5

60000/60000 [==============================] - 7s 110us/step - loss: 0.0682 - acc: 0.9797

Epoch 4/5

60000/60000 [==============================] - 7s 111us/step - loss: 0.0501 - acc: 0.9851

Epoch 5/5

60000/60000 [==============================] - 7s 115us/step - loss: 0.0377 - acc: 0.9886

We quickly reach an accuracy of 0.9886 (98.9%) on the training data.

每訓練完一個週期,會計算此週期的accuracy與loss放到train_history.history這個dictionary object中。

{'acc': [0.99143333333333339,

0.99368333336512249,

0.99528333330154417,

0.99626666663487751,

0.99703333333333333],

'loss': [0.029321372731526692,

0.022051296432067949,

0.016661394806827108,

0.01325423946591715,

0.010353616964444519]}

import matplotlib.pyplot as plt

def show_train_history(train_history, label_1, label_2):

plt.plot(train_history.history[label_1])

plt.plot(train_history.history[label_2])

plt.title('Train History')

plt.ylabel('train')

plt.xlabel('Epoch')

plt.legend([label_1, label_2], loc='center right')

plt.show()

show_train_history(train_history, 'acc','loss')

現在,利用訓練好的模型來預測test dataset:

predicted_results = network.predict(test_images, verbose=1)

predicted_results[0]

array([ 3.35639416e-12, 4.17718540e-13, 9.10662337e-08,

1.63431287e-05, 1.50763562e-18, 1.97378971e-11,

1.31892617e-19, 9.99983549e-01, 4.16868137e-11,

2.15591989e-08], dtype=float32)

test_labels[0]

array([ 0., 0., 0., 0., 0., 0., 0., 1., 0., 0.])

最後,我們使用test dataset來評估模型的準確率:

evaluate(self, x=None, y=None, batch_size=None, verbose=1, sample_weight=None, steps=None)

- x input data, as a Numpy array or list of Numpy arrays (if the model has multiple inputs).

- y labels, as a Numpy array.

- batch_size Integer. If unspecified, it will default to 32.

>>> test_loss, test_acc = network.evaluate(test_images, test_labels)

>>> print('test_acc:', test_acc)

test_acc: 0.981

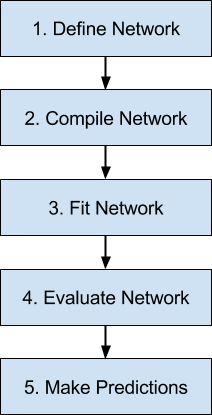

The 5 steps in the neural network model life-cycle in Keras:

2.2 Data representations for neural networks

In mathematics, tensors are geometric objects that describe linear relations between geometric vectors, scalars, and other tensors.In general, all current machine-learning systems use tensors as their basic data structure. A tensor is a container for data.

2.2.1 Scalars (0D tensors)

A tensor that contains only one number is called a scalar (or scalar tensor, or 0-dimensional tensor, or 0D tensor).The number of axes of a tensor is also called its rank.

2.2.2 Vectors (1D tensors)

An array of numbers is called a vector, or 1D tensor. A 1D tensor is said to have exactly one axis.2.2.3 Matrices (2D tensors)

An array of vectors is a matrix, or 2D tensor. A matrix has two axes (often referred to rows and columns).2.2.4 3D tensors and higher-dimensional tensors

If you pack such matrices in a new array, you obtain a 3D tensor. In deep learning, you’ll generally manipulate tensors that are 0D to 4D, although you may go up to 5D if you process video data.2.2.5 Key attributes

A tensor is defined by three key attributes:- Number of axes (rank) This is also called the tensor’s ndim in Python libraries such as Numpy.

- Shape This is a tuple of integers that describes how many dimensions the tensor has along each axis. A vector has a shape with a single element, such as (5,), whereas a scalar has an empty shape, ().

- Data type (usually called dtype in Python libraries)

print(train_images.ndim)

3

print(train_images.shape)

(60000, 28, 28)

print(train_images.ndim)

2

print(train_images.shape)

(60000, 784)

2.2.6 Manipulating tensors in Numpy

Selecting specific elements in a tensor is called tensor slicing.- Select image digits #10 to #100

>>> my_slice = train_images[10:100]

>>> print(my_slice.shape)

(90, 28, 28)

my_slice = train_images[:, 14:, 14:]

my_slice = train_images[:, 7:-7, 7:-7]

2.2.7 The notion of data batches

In general, the first axis (axis 0, because indexing starts at 0) in all data tensors you’ll come across in deep learning will be the samples axis.The deep-learning models don’t process an entire dataset at once; rather, they break the data into small batches. When considering such a batch tensor, the first axis (axis 0) is called the batch axis or batch dimension.

For the batch size of 128, the n-th batch samples in the MNIST dataset:

batch = train_images[128 * n:128 * (n + 1)]

2.2.8 Real-world examples of data tensors

The data you’ll manipulate will almost always fall into one of the following categories:- Vector data 2D tensors of shape (samples, features)

- Timeseries data or sequence data 3D tensors of shape (samples, timestamps, features)

- Images 4D tensors of shape(samples,height,width,channels) or (samples, channels, height, width)

- Video 5D tensors of shape (samples, frames, height, width, channels) or (samples, frames, channels, height, width)

2.2.9 Vector data

Examples:- Each person can be characterized as a vector of 3 feature values: age, ZIP code, and income An entire dataset of 100,000 people can be stored in a 2D tensor of shape (100000, 3).

- Each document can be characterize by the counts of how many times each word appears in it (out of a dictionary of 20,000 common words). An entire dataset of 500 documents can be stored in a tensor of shape (500, 20000).

| person#1 | age_1 | ZIP_1 | income_1 |

| person#2 | age_2 | ZIP_2 | income_2 |

| . | . | . | . |

| person#100000 | age_100000 | ZIP_100000 | income_100000 |

2.2.10 Timeseries data or sequence data

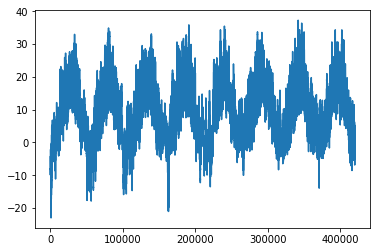

Whenever time matters in your data , it makes sense to store it in a 3D tensor with an explicit time axis. The time axis is always the second axis (axis of index 1), by convention.Examples:

- A day's stock price is recorded every minute: highest, lowest and the current price. An entire dataset of 6.5 hours trading time(390 minutes) for 250 days can be stored in a 3D tensor of shape(250, 390, 3).

2.2.11 Image data

Images typically have three dimensions: height, width, and color depth.- A batch of 128 grayscale images of size 256 × 256 could thus be stored in a tensor of shape (128, 256, 256, 1)

- a batch of 128 color images could be stored in a tensor of shape (128, 256, 256, 3)

2.2.12 Video data

Because each frame can be stored in a 3D tensor (height, width, color_depth), a sequence of frames can be stored in a 4D tensor (frames, height, width, color_depth), and thus a batch of different videos can be stored in a 5D tensor of shape (samples, frames, height, width, color_depth).2.3 The gears of neural networks: tensor operations

Element-wise operations

Element-wise operations are operations that are applied independently to each entry in the tensors.If you want to write a naive Python implementation of an element-wise operation, you use a for loop.

As ReLU (Rectified Linear Unit) function as an example: 輸入超過0, 輸出結果就是輸入; 輸入小於0就輸出0.

The naive Python implementation of an element-wise ReLu operation:

def naive_relu(x):

assert len(x.shape) # x is a 2D Numpy tensor.

x = x.copy() # Avoid overwriting the input

for i in range(x.shape[0]):

for j in range(x.shape[1]):

x[i, j] = max(x[i, j], 0)

return x

In practice, when dealing with Numpy arrays, these operations are available as well optimized built-in Numpy functions. So, in Numpy, you can do the following element-wise operation,

import numpy as np

z = x + y # Element-wise addition

z = np.maximum(z, 0.) # Element-wise function

2.3.2 Broadcasting

What happens with element-wise operations when the shapes of the two tensors are different?When possible, and if there’s no ambiguity, the smaller tensor will be broadcasted to match the shape of the larger tensor. Broadcasting consists of two steps:

- Axes (called broadcast axes) are added to the smaller tensor to match the ndim of the larger tensor.

- The smaller tensor is repeated alongside these new axes to match the full shape of the larger tensor.

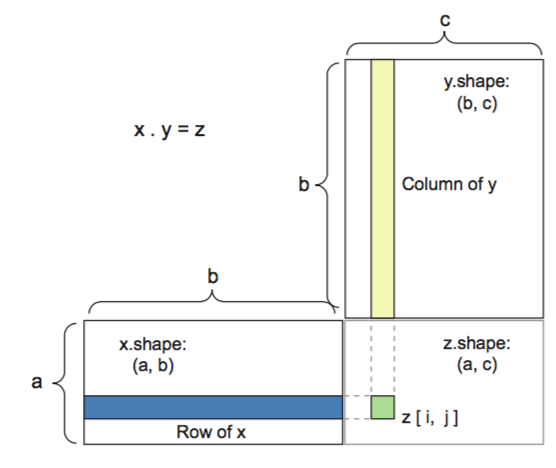

2.3.3 Tensor dot

In mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers (usually coordinate vectors) and returns a single number. In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used and often called inner product (or rarely projection product)

You can take the dot product of two matrices x and y (dot(x, y)) if and only if x.shape[1] == y.shape[0]. The result is a matrix with shape (x.shape[0], y.shape[1]), where the coefficients are the vector products between the rows of x and the columns of y.

2.3.4 Tensor reshaping

Reshaping a tensor means rearranging its rows and columns to match a target shape.2.3.6 A geometric interpretation of deep learning

Neural networks consist entirely of chains of tensor operations and that all of these tensor operations are just geometric transformations of the input data.2.4 The engine of neural networks: gradient-based optimization

Each neural layer transforms its input data.

The transformation is depended on the weights or trainable parameters of the layer.

These weights contain the information learned by the network from exposure to training data.

Initially, these weight matrices are filled with small random values (a step called random initialization).

What comes next is to gradually adjust these weights, based on a feedback signal. This gradual adjustment, also called training, is basically the learning that machine learning is all about.

This happens within what’s called a training loop, these steps are repeated as long as necessary:

- Draw a batch of training samples x and corresponding targets y.

- Run the network on x (a step called the forward pass) to obtain predictions y_pred.

- Compute the loss of the network on the batch, a measure of the mismatch between y_pred and y.

- Update all weights of the network in a way that slightly reduces the loss on this batch.

A much better approach is to take advantage of the fact that all operations used in the network are differentiable, and compute the gradient of the loss with regard to the network’s coefficients. You can then move the coefficients in the opposite direction from the gradient, thus decreasing the loss.

2.4.1 What’s a derivative?

If you want to reduce the value of f(x), you just need to move x a little in the opposite direction from the derivative.2.4.2 Derivative of a tensor operation: the gradient

With a function f(W) of a tensor, you can reduce f(W) by moving W in the opposite direction from the gradient: for example,

W1 = W0 - step * gradient(f)(W0)

where step is a small scaling factor.

2.4.3 Stochastic gradient descent

If you update the weights in the opposite direction from the gradient, the loss will be a little less every time.

This is called mini-batch stochastic gradient descent (mini- batch SGD). The term stochastic refers to the fact that each batch of data is drawn at random (stochastic is a scientific synonym of random).

If the parameter under consideration were being optimized via SGD with a small learning rate, then the optimization process would get stuck at the local minimum instead of making its way to the global minimum.

You can avoid such issues by using momentum.

Momentum is implemented by moving the ball at each step based not only on the current slope value (current acceleration)

but also on the current velocity (resulting from past acceleration).

past_velocity = 0

momentum = 0.1 # Constant momentum factor

while loss > 0.01: # Optimization loop

w, loss, gradient = get_current_parameters()

velocity = past_velocity * momentum + learning_rate * gradient

w = w + momentum * velocity - learning_rate * gradient

past_velocity = velocity

update_parameter(w)

2.4.4 Chaining derivatives: the Backpropagation algorithm

Chain rule:

f'(x) = f'(g) * g'(x)

f(W1, W2, W3) = a( W1, b( W2, c(W3) ) )

Thanks to symbolic differentiation, you’ll never have to implement the Backpropagation algorithm by hand.

2.5 Looking back at our first example

3 Getting started with neural networks

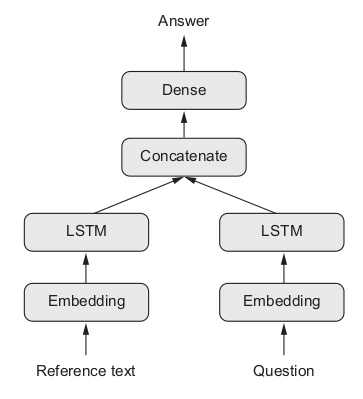

This chapter covers the three most common use cases of neural networks:

- binary classification Classifying results as positive or negative.

- multiclass classification

- scalar regression Estimating the number of a target.

3.1 Anatomy of a neural network

Training a neural network revolves around the following objects:

- Layers, which are combined into a network (or model)

- The input data and corresponding targets

- The loss function, which defines the feedback signal used for learning

- The optimizer, which determines how learning proceeds

3.1.1 Layers: the building blocks of deep learning

Every layer will only accept input tensors of a certain shape and will return output tensors of a certain shape.

For ex.,

from keras import layers

layer = layers.Dense(32, input_shape=(784,))

- The created layer will only accept as input 2D tensors where the first dimension(axis 0) is 784( 28 x 28 ).

- This layer will return a tensor where the first dimension has been transformed to be 32.

- This layer can only be connected to a downstream layer that expects 32 dimensional vectors as its input.

from keras import models

from keras import layers

model = models.Sequential()

model.add(layers.Dense(32, input_shape=(784,)))

model.add(layers.Dense(32))

3.1.2 Models: networks of layers

Picking the right network architecture is more an art than a science, only practice can help you become a proper neural-network architect.

3.1.3 Loss functions and optimizers: keys to configuring the learning process

Once the network architecture is defined, you still have to choose two more things:

- Loss function (objective function) The quantity that will be minimized during training. It represents a measure of success for the task at hand.

- Optimizer Determines how the network will be updated based on the loss function. It implements a specific variant of stochastic gradient descent ( SGD ).

3.2 Introduction to Keras

Keras(https://keras.io) is a deep-learning framework for Python that provides a convenient way to define and train almost any kind of deep-learning model.

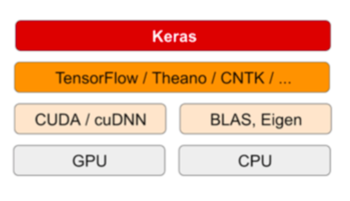

3.2.1 Keras, TensorFlow, Theano, and CNTK

Keras is a model-level library, providing high-level building blocks for developing deep-learning models.

Keras relies on a specialized, well-optimized tensor library to do so, serving as the backend engine of Keras.

Several different backend engines(TensorFlow, Microsoft Cognitive Toolkit/CNTK , Caffe, Theano, Torch, Pytotch) can be plugged seamlessly into Keras.

Any piece of code that you write with Keras can be run with any of these backends without having to change anything in the code.

Keras is able to run seamlessly on both CPUs and GPUs.

- running on CPU TensorFlow is itself wrapping a low-level library for tensor operations called Eigen (http://eigen.tuxfamily.org).

- running on GPU TensorFlow wraps a library of well-optimized deep-learning operations called the NVIDIA CUDA Deep Neural Network library (cuDNN ).

3.2.2 Developing with Keras: a quick overview

The typical Keras workflow looks just like:

- Define your training data: input tensors and target tensors.

- Define a network of layers (or model ) that maps your inputs to your targets. There are two ways to define a model: using the Sequential class (only for linear stacks of layers, which is the most common network architecture by far) or the functional API.

- Configure the learning process by choosing a loss function, an optimizer, and some metrics to monitor. The learning process is configured in the compilation step. For ex.,

- Iterate on your training data by calling the fit() method of your model. Finally, the learning process consists of passing Numpy arrays of input data (and the corresponding target data) to the model via the fit() method

| Sequential class | functional API |

|---|---|

from keras import models from keras import layers model = models.Sequential() model.add(layers.Dense(32, activation='relu', input_shape=(784,))) model.add(layers.Dense(10, activation='softmax')) | from keras import models from keras import layers input_tensor = layers.Input(shape=(784,)) x = layers.Dense(32, activation='relu')(input_tensor) output_tensor = layers.Dense(10, activation='softmax')(x) model = models.Model(inputs=input_tensor, outputs=output_tensor) |

from keras import optimizers

model.compile(optimizer=optimizers.RMSprop(lr=0.001),

loss='mse',

metrics=['accuracy'])

model.fit(input_tensor, target_tensor, batch_size=128, epochs=10)3.3 Setting up a deep-learning workstation

It’s highly recommended that you run deep-learning code on a modern neural network processor because some applications will be excruciatingly slow on CPU , even a fast multicore CPU .

Running cloud GPU instances ( AWS EC2 GPU instance or on Google Cloud Platform) can become expensive over time.

3.3.1 Jupyter notebooks: the preferred way to run deep-learning experiments

It allows you to break up long experiments into smaller pieces that can be executed independently, which makes development interactive and means you don’t have to rerun all of your previous code if something goes wrong late in an experiment.

3.3.2 Getting Keras running: two options

- Use the official EC2 Deep Learning AMI(https://aws.amazon.com/amazon-ai/amis) and run Keras experiments as Jupyter notebooks on EC2 .

- Install everything from scratch on a local Unix workstation. Do this if you already have a high-end NVIDIA GPU .

3.3.3 Running deep-learning jobs in the cloud: pros and cons

3.3.4 What is the best GPU for deep learning?

Using a GPU isn’t strictly necessary, but it’s strongly recommended.

You may sometimes have to wait for several hours for a model to train, instead of mere minutes on a good GPU .

nVidia's GPU

Modern deep-learning frameworks can only run on NVIDIA cards.To use your NVIDIA GPU for deep learning, you need to install two things:- CUDA A set of drivers for your GPU that allows it to run a low-level programming language for parallel computing.

- cu DNN A library of highly optimized primitives for deep learning. When using cu DNN and running on a GPU , you can typically increase the training speed of your models by 50% to 100%.

Please consult the TensorFlow website for detailed instructions about which versions are currently recommended.

Intel's TPU

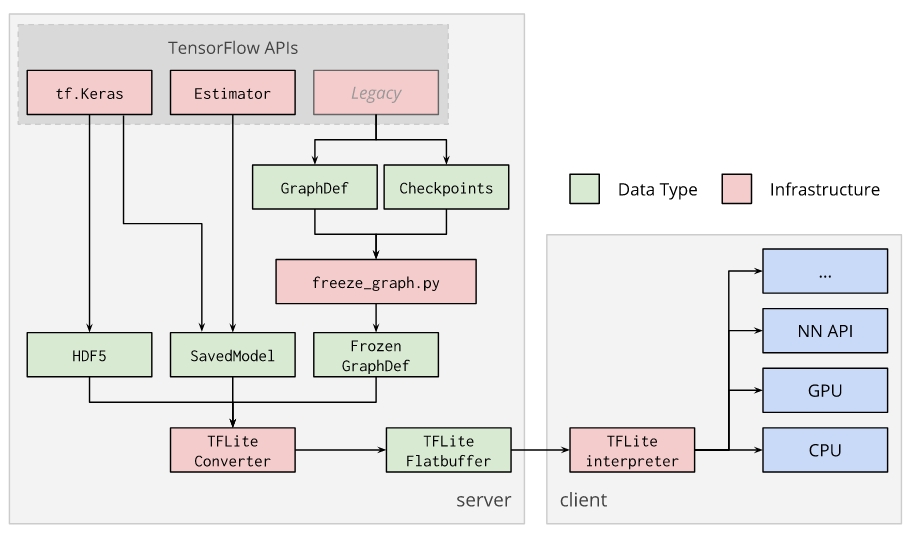

Coral is a new platform, but it’s designed to work seamlessly with TensorFlow.To bring TensorFlow models to Coral you can use TensorFlow Lite, a toolkit for running machine learning inference on edge devices.

- Pre-trained models The Coral website provides pre-trained TensorFlow Lite models that have been optimized to use with Coral hardware.

- Retrain a model You can customize Coral’s pre-trained machine learning models to recognize your own images and objects. To do this, follow the instructions in Retrain an existing model.

- Build your own TensorFlow model You can follow the steps in Build a new model for the Edge TPU. Note that your model must meet the Model requirements. To prepare your model for the Edge TPU,

- You’ll first convert and optimize it for edge devices with the TensorFlow Lite Converter.

The TensorFlow Lite converter is used to convert TensorFlow models into an optimized FlatBuffer format, so that they can be used by the TensorFlow Lite interpreter.

The converter supports the following input formats:

- SavedModels

- Frozen GraphDef: Models generated by freeze_graph.py.

- tf.keras HDF5 models.

- Any model taken from a tf.Session (Python API only).

The TensorFlow Lite Converter can perform quantization on any trained TensorFlow model. You can read more about this technique in Post-training quantization.

- You’ll then compile the model for the Edge TPU with the Edge TPU Model Compiler.

- You cannot train a model directly with TensorFlow Lite; instead you must convert your model from a TensorFlow file (such as a .pb file) to a TensorFlow Lite file (a .tflite file), using the TensorFlow Lite converter.

- Tensors are either 1-, 2-, or 3-dimensional in the models for the Edge TPU.

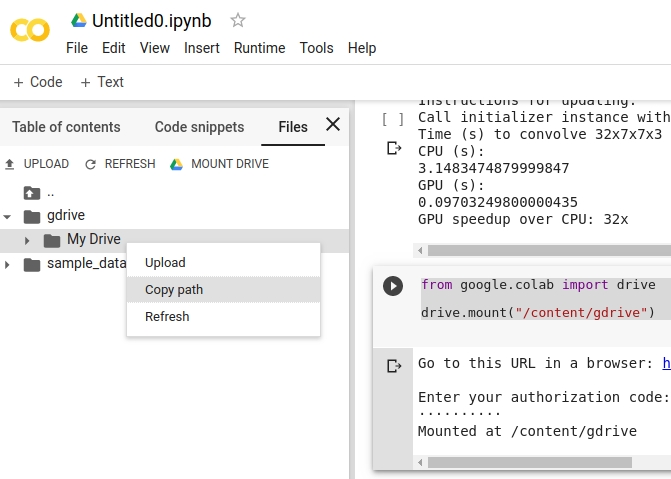

Cloud TPU : Colab. Setting up Google Colab and training Model using TensorFlow and Keras

Google Colab or Google Colabotary is the platform which allows you to train your machine learning or Tensorflow project on GPU for free.

Just log in to your google account and open a Google Colab, it will open a python notebook just like jupyter, and then you can train your model from there.

- open a Google Drive and create a folder Create a folder named Colab, then, create three more folder Pickle, Logs and Models. Then upload pickle files in Pickle folder and we will use Logs and Models folder to store logs and models file.

- open google Colab Click on new python 3 notebook.

- HW runtime setting Clicking Runtime then select change runtime type, set hardware accelerator type to be GPU from there.

- link your Google Drive with Colab To let Colab use google drive to save all your logs and models, execute:

from google.colab import drive

drive.mount("/content/gdrive")

Mounted at /content/gdrive

this copied text is the path of your HOME on Google Drive:

this copied text is the path of your HOME on Google Drive:

/content/gdrive/My Drive

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Dropout, Activation, Flatten

from tensorflow.keras.layers import Conv2D, MaxPooling2D

from tensorflow.keras.callbacks import TensorBoard

from tensorflow.keras.utils import to_categorical

import pickle

import time

MODEL_NAME = "3-conv-128-layer-dense-1-out-2-softmax-categorical-cross-2-CNN"

pickle_in = open("/content/drive/My Drive/Projects/cat vs dog/Xv.pickle","rb")

X = pickle.load(pickle_in)

pickle_in = open("/content/drive/My Drive/Projects/cat vs dog/Y.pickle","rb")

y = pickle.load(pickle_in)

y = to_categorical(y)

X = X/255.0

model = Sequential()

model.add(Conv2D(128, (3, 3), input_shape=(50,50,1)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.3))

model.add(Conv2D(128, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.3))

model.add(Conv2D(128, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.3))

model.add(Flatten())

model.add(Dense(128))

model.add(Activation('relu'))

model.add(Dense(2))

model.add(Activation('softmax'))

tensorboard = TensorBoard(log_dir="/content/gdrive/My Drive/Colab/Logs/{}".format(MODEL_NAME))

model.compile(loss='categorical_crossentropy',

optimizer='adam',

metrics=['accuracy'],

)

model.fit(X, y,

batch_size=32,

epochs=10,

validation_split=0.3,

callbacks=[tensorboard])

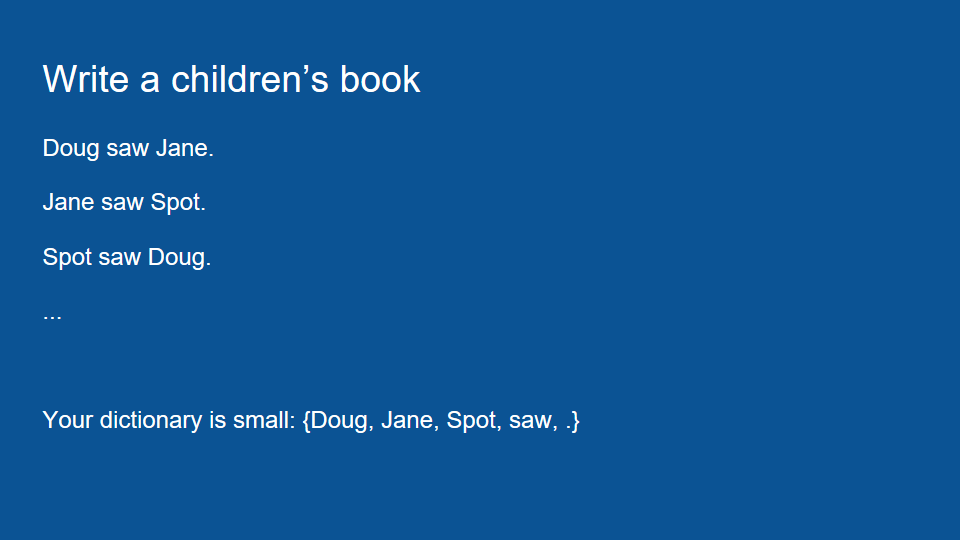

3.4 Classifying movie reviews: a binary classification example

In this example, you’ll learn to classify movie reviews as positive or negative, based on the text content of the reviews.3.4.1 The IMDB dataset

The IMDB dataset comes packaged with Keras. It has already been preprocessed:- the reviews (sequences of words) have been turned into sequences of integers, where each integer stands for a specific word in a dictionary.

- words are indexed by overall frequency in the dataset, so that for instance the integer "3" encodes the 3rd most frequent word in the data.

- "0" does not stand for a specific word, but instead is used to encode any unknown word.

from keras.datasets import imdb

(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words=10000)

- The argument num_words=10000 means you’ll only keep the top 10,000 most frequently occurring words in the training data.

- The variables train_data and test_data are lists of reviews Each review is a list of word indices (encoding a sequence of words)

- train_labels and test_labels are lists of 0s and 1s 0 stands for negative and 1 stands for positive

word_index = imdb.get_word_index()

index_word = dict( [ (value, key) for (key, value) in word_index.items() ] )

decoded_review = ' '.join( [ index_word.get(i - 3, '?') for i in train_data[0] ] )

- imdb.get_word_index() Download the dictionary mapping from Downloading data from https://s3.amazonaws.com/text-datasets/imdb_word_index.json then return a dictionary where key are words (str) and values are indexes (integer). For ex., word_index["giraffe"] might return 1234.

- index_word is a dictionary mapping integer indices to words

- Note that the indices are offset by 3 because 0, 1, and 2 are reserved indices for “padding,” “start of sequence,” and “unknown.”

train_data[0][1]

14

index_word.get(14-3)

'this'

shoes=["adidas","ascis","nike"]

print('-'.join(shoes))

adidas-ascis-nike

3.4.2 Preparing the data

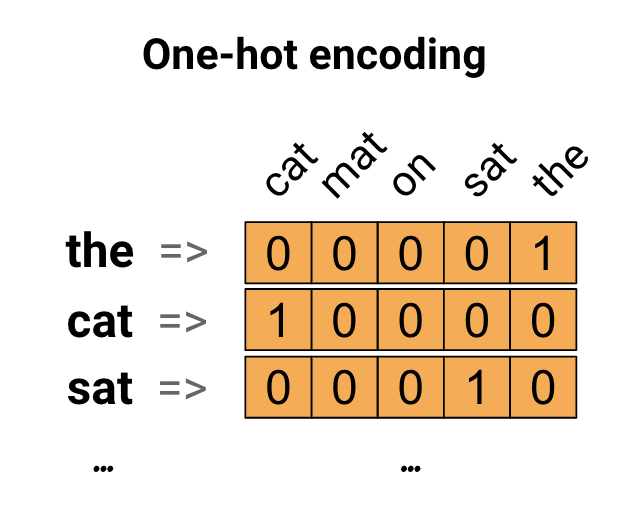

Each review is a list of word indices. We can’t feed lists of integers into a neural network which needs numerical values.One-hot encode your lists to turn them into vectors of 0s and 1s.

For instance, turning the sequence [3, 5] into a 10,000-dimensional vector that would be all 0s except for indices 3 and 5, which would be 1s.

import numpy as np

# data is a list of list:

# the 1st axix is the sample index,

# the 2nd axix is the review

def vectorize_sequences(sequences, dimension=10000):

#Creates an all-zero matrix of shape (len(sequences), dimension)

results = np.zeros((len(sequences), dimension))

for i, sequence in enumerate(sequences):

results[i, sequence] = 1.

return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

It allows us to loop over something and have an automatic counter.

for counter, list_value in enumerate(some_list):

print(counter, list_value)

If a word's index occurs in a sentence, it is coded 1.

The for loop use each review as the Boolean mask to encode the count of each word.

You should also vectorize your labels:

y_train = np.asarray(train_labels).astype('float32')

y_test = np.asarray(test_labels).astype('float32')

NumPy provides an N-dimensional array type, the ndarray, which describes a collection of “items” of the same type.

All ndarrays are homogenous: every item takes up the same size block of memory, and all blocks are interpreted in exactly the same way.

- numpy.asarray(a, dtype=None, order=None) Convert the input to an NumPy array. NumPy 裡面的 Array 與 Python 原生 List 不同,他是固定大小的,不像 Python List 可以動態增減。

- ndarray.astype(dtype, order='K', casting='unsafe', subok=True, copy=True) Copy of the array, cast to a specified type. NumPy 所有元件都需要是相同大小的,如此才能在記憶體有相同的 Size。

3.4.3 Building your network

keras.layers.Dense(units, activation=None, use_bias=True, kernel_initializer='glorot_uniform', bias_initializer='zeros', kernel_regularizer=None, bias_regularizer=None, activity_regularizer=None, kernel_constraint=None, bias_constraint=None)

- units Positive integer, dimensionality of the output space. (the same as the number of hidden units in this layer)

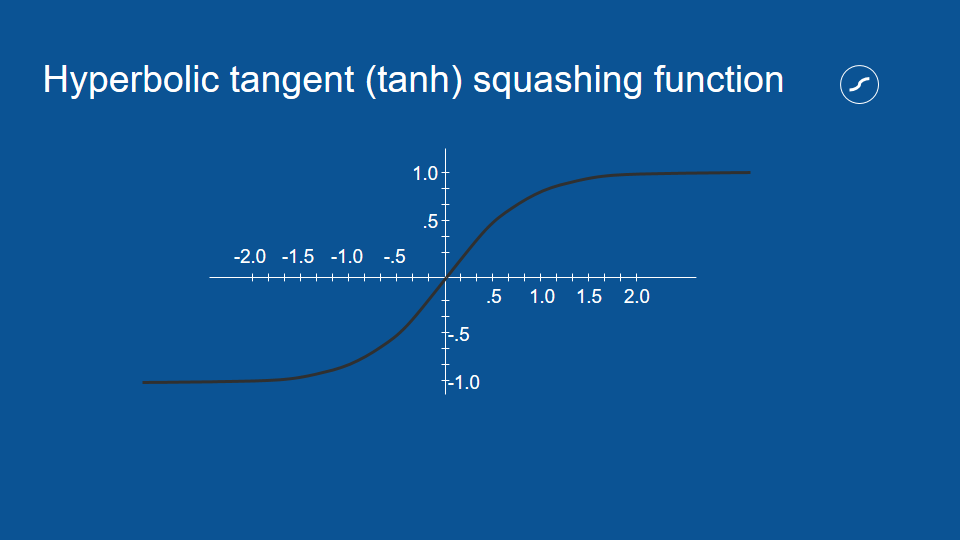

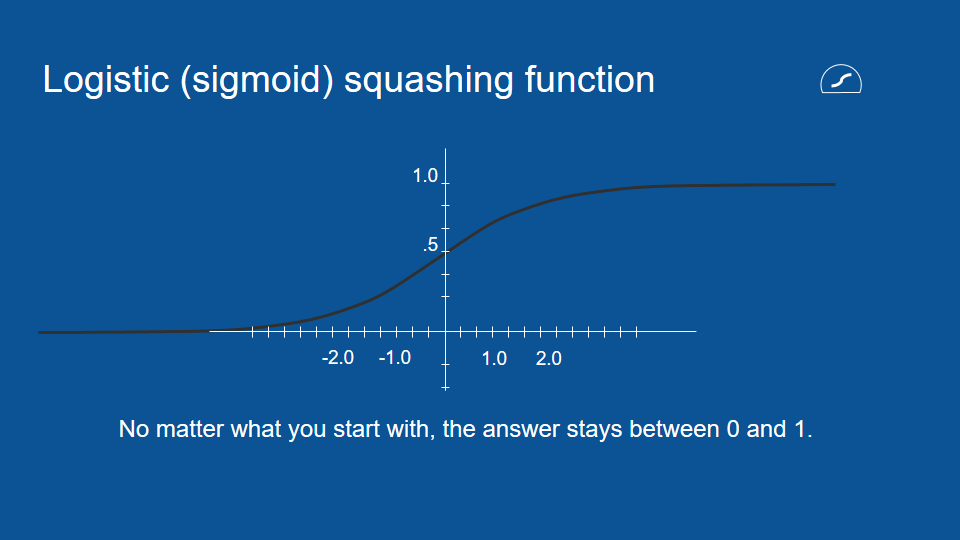

- activation Activation function to use. If you don't specify anything, no activation is applied (ie. "linear" activation: a(x) = x). Networks with a linear activation function are effectively only one layer deep, regardless of how complicated their architecture is. Real world and real problems are nonlinear . To make the incoming data non linear we use non linear mapping called activation function .

output = activation(dot(input, kernel) + bias)

- activation is the element-wise activation function passed as the activation argument

- kernel is a weights matrix created by the layer

- bias is a bias vector created by the layer (only applicable if use_bias is True)

Dense(16, activation='relu')16 is the number of hidden units of the layer.

Having 16 hidden units means the weight matrix W will have shape (input_dimension, 16), the dot product with W will project the input data onto a 16-dimensional representation space(and then you’ll add the bias vector b and apply the relu operation).

Having more hidden units allows your network to learn more-complex representations, but it makes the network more computationally expensive and may lead to learning unwanted patterns (patterns that will improve performance on the training data but not on the test data).

Therefore, there are two key architecture decisions to be made about such a stack of Dense layers:

- How many layers to use

- How many hidden units to choose for each layer

- Two intermediate layers with 16 hidden units each The intermediate layers will use relu as their activation function

- A third layer that will output the scalar prediction regarding the sentiment of the current review The final layer will use a sigmoid activation so as to output a probability (a score between 0 and 1, indicating how likely the sample is to have the target “1”(positive)

You can create a Sequential model by passing a list of layer instances to the constructor.

Here’s the Keras implementation,

from keras import models

from keras import layers

model = models.Sequential()

model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))

model.add(layers.Dense(16, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

- An optimizer. This could be the string identifier of an existing optimizer (such as rmsprop or adagrad), or an instance of the Optimizer class.

- A loss function. This is the objective that the model will try to minimize. It can be the string identifier of an existing loss function (such as categorical_crossentropy or mse), or it can be an objective function.

- A list of metrics. For any classification problem you will want to set this to metrics=['accuracy']. A metric could be the string identifier of an existing metric or a custom metric function.

Here’s the step to configure the model with the rmsprop optimizer and the binary_crossentropy loss function.

model.compile(optimizer='rmsprop',

loss='binary_crossentropy',

metrics=['accuracy'])

3.4.4 Validating your approach

Keras models are trained on Numpy arrays of input data and labels. For training a model, you will typically use the fit function.

In order to monitor the accuracy of the model on data it has never seen(been trained) before during training, you’ll create a validation set by setting apart 10,000 samples from the original training data.

# data to be used to train the model

partial_x_train = x_train[10000:]

partial_y_train = y_train[10000:]

# data to be used to evaluate the model during the training

x_val = x_train[:10000]

y_val = y_train[:10000]

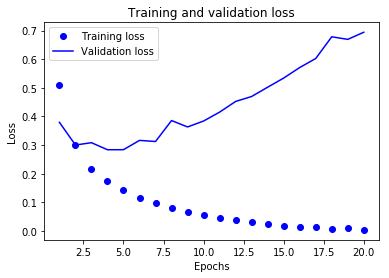

You’ll now train the model for 20 epochs (20 iterations over all samples in the x_train and y_train tensors), in mini-batches of 512 samples.

At the same time, you’ll monitor loss and accuracy on the 10,000 samples that you set apart. You do so by passing the validation data as the validation_data argument.

history = model.fit(partial_x_train, partial_y_train,

epochs=20,

batch_size=512,

validation_data=(x_val, y_val))

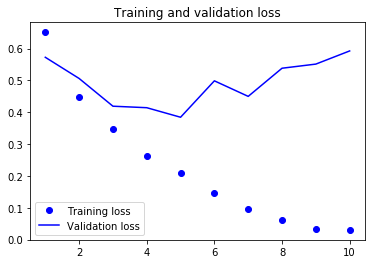

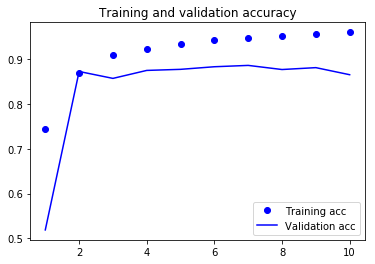

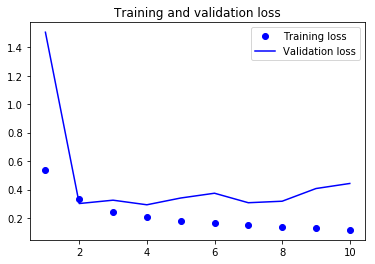

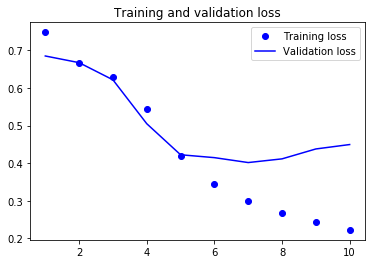

Train on 15000 samples, validate on 10000 samples Epoch 1/20 15000/15000 [==============================] - 6s 394us/step - loss: 0.5084 - acc: 0.7813 - val_loss: 0.3797 - val_acc: 0.8684 ... Epoch 20/20 15000/15000 [==============================] - 3s 188us/step - loss: 0.0041 - acc: 0.9999 - val_loss: 0.6941 - val_acc: 0.8658

Note that the call to model.fit() returns a History object which has a member history.

It is a dictionary contains four entries: one per metric that was being monitored during training and during validation.

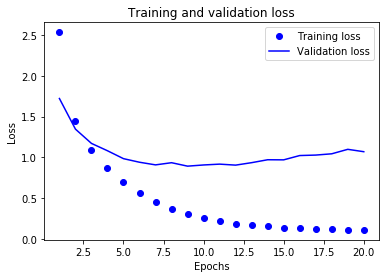

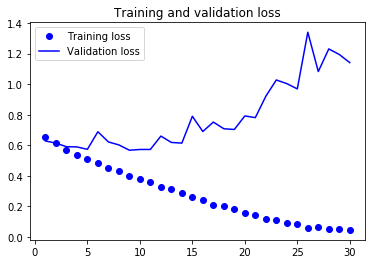

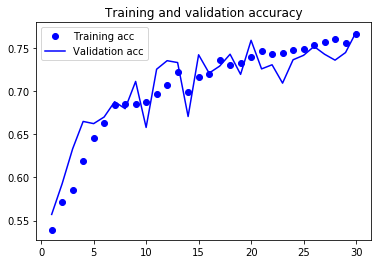

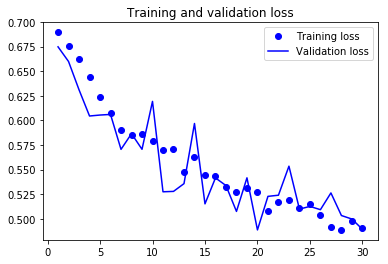

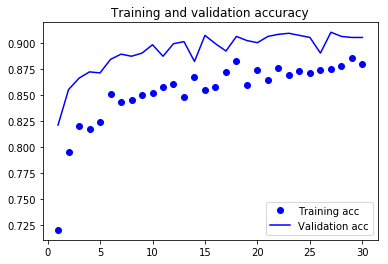

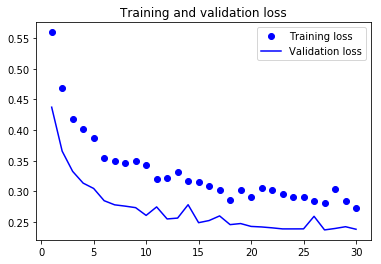

Let’s use Matplotlib to plot the training and validation loss side by side

import matplotlib.pyplot as plt

history_dict = history.history

loss_values = history_dict['loss']

val_loss_values = history_dict['val_loss']

acc = history_dict['acc']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, loss_values, 'bo', label='Training loss') # “bo” is for “blue dot.”

plt.plot(epochs, val_loss_values, 'b', label='Validation loss') # “b” is for “solid blue line.”

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

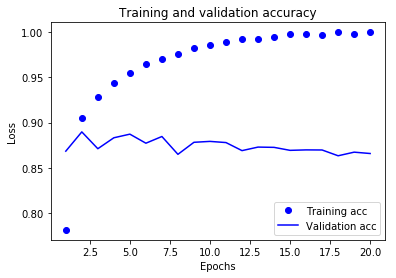

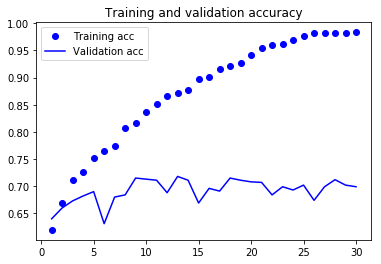

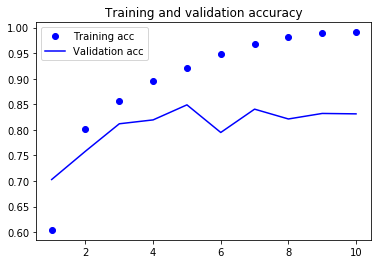

To plot the accuracy:

plt.clf() #Clears the figure

acc_values = history_dict['acc']

val_acc_values = history_dict['val_acc']

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc_values, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()()

plt.show()

As you can see, the training loss decreases with every epoch, and the training accuracy increases with every epoch.

But that isn’t the case for the validation loss and accuracy: the validation loss is increased and the validation accuracy is decreased after the 5-th epoch.

This is overfitting: after the 2nd epoch, you’re overoptimizing on the training data.

In this case, to prevent overfitting, you could stop training earlier after 5 epochs.

model = models.Sequential()

model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))

model.add(layers.Dense(16, activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

model.compile(optimizer='rmsprop',

loss='binary_crossentropy',

metrics=['accuracy'])

model.fit(x_train, y_train, epochs=5, batch_size=512)

results = model.evaluate(x_test, y_test)

results

Out[29]:

[0.31565191437244416, 0.87944]

model.evaluate(x=None, y=None, batch_size=None, verbose=1, sample_weight=None, steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False)

Returns the loss value and metrics values for the model in test mode.

- x: Input data

- y: Target data

- batch_size: Integer or None.

- verbose: 0 or 1.

3.4.5 Using a trained network to generate predictions on new data

You can generate the likelihood of reviews being positive by using the predict method:

model.predict(x_test)

array([[ 0.1981305 ],

[ 0.99992895],

[ 0.72518015],

...,

[ 0.12744974],

[ 0.05118057],

[ 0.70532745]], dtype=float32)

but less confident for others (0.6, 0.4).

3.4.6 Further experiments

3.4.7 Wrapping up

- You usually need to do quite a bit of preprocessing on your raw data in order to be able to feed it—as tensors—into a neural network.

- Stacks of Dense layers with relu activations can solve a wide range of problems (including sentiment classification)

- In a binary classification problem (two output classes), your network should end with a Dense layer with one unit and a sigmoid activation: the output of your network should be a scalar between 0 and 1, encoding a probability. With such a scalar sigmoid output on a binary classification problem, the loss function you should use is binary_crossentropy.

- The rmsprop optimizer is generally a good enough choice, whatever your problem.

- To avoid the overfitting, be sure to always monitor performance on data that is outside of the training set.

3.5 Classifying newswires: a multiclass classification example

In this section, you’ll build a network to classify Reuters newswires into 46 mutually exclusive topics.

The Reuters dataset is a set of short newswires and their topics, published by Reuters in 1986. It’s a simple, widely used toy dataset for text classification. There are 46 different topics.

the Reuters dataset comes packaged as part of Keras.

from keras.datasets import reuters

(train_data, train_labels), (test_data, test_labels) = reuters.load_data(

num_words=10000)

>>> len(train_data)

8982

>>> len(test_data)

2246

Here’s how you can decode train_data[0] back to words,

word_index = reuters.get_word_index()

index_word = dict([(value, key) for (key, value) in word_index.items()])

decoded_newswire = ' '.join([index_word.get(i - 3, '?') for i in train_data[0]])

3.5.2 Preparing the data

Each short news is a list of word indices.

We need to encode the data in a value

import numpy as np

def vectorize_sequences(sequences, dimension=10000):

results = np.zeros( (len(sequences), dimension) )

for i, sequence in enumerate(sequences):

results[i, sequence] = 1.

return results

x_train = vectorize_sequences(train_data)

x_test = vectorize_sequences(test_data)

x_train.shape

(8982, 10000)

x_test.shape

(2246, 10000)

There are some methods to use the one-hot encoding of the labels: each label as an all-zero vector with a 1 in the place of the label index.

- a built-in way to do this in Keras

from keras.utils.np_utils import to_categorical

one_hot_train_labels = to_categorical(train_labels)

one_hot_test_labels = to_categorical(test_labels)

def to_one_hot(labels, dimension=46):

results = np.zeros( (len(labels), dimension) )

for i, label in enumerate(labels):

results[i, label] = 1.

return results

one_hot_train_labels = to_one_hot(train_labels)

one_hot_test_labels = to_one_hot(test_labels

3.5.3 Building your network

In the previous example, you used 16-dimensional intermediate layers, but a 16-dimensional space may be too limited to learn to separate 46 different classes: such small layers may act as information bottlenecks, therefore, let’s go with 64 units.

from keras import models

from keras import layers

model = models.Sequential()

model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))

model.add(layers.Dense(64, activation='relu'))

model.add(layers.Dense(46, activation='softmax'))

- You end the network with a Dense layer of size 46 The network will output a 46-dimensional vector.

- The last layer uses a softmax activation. For every input sample, the network will produce a 46- dimensional output vector, where output[i] is the probability that the sample belongs to class i. The 46 scores will sum to 1.

model.compile(optimizer='rmsprop',

loss='categorical_crossentropy',

metrics=['accuracy'])

3.5.4 Validating your approach

# Set validation set

x_val = x_train[:1000]

y_val = one_hot_train_labels[:1000]

# Set trained set

partial_x_train = x_train[1000:]

partial_y_train = one_hot_train_labels[1000:]

# train the network for 20 epochs.

history = model.fit(partial_x_train,

partial_y_train,

epochs=20,

batch_size=512,

validation_data=(x_val, y_val))

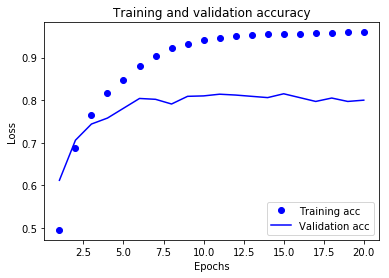

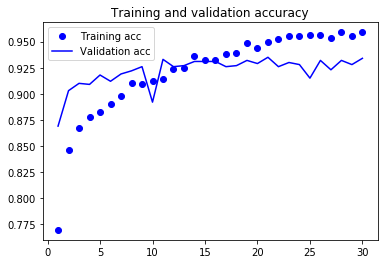

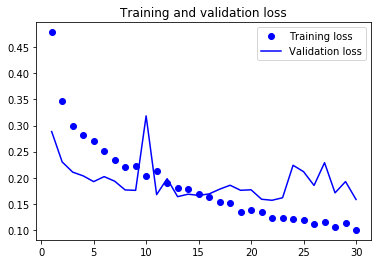

import matplotlib.pyplot as plt

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(loss) + 1)

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

Plotting the training and validation accuracy

# Clears the figure

plt.clf()

acc = history.history['acc']

val_acc = history.history['val_acc']

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

The network begins to overfit after 6-th epoch.

Let’s train a new network from scratch for 6 epochs and then evaluate it on the test set.

model = models.Sequential()

model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))

model.add(layers.Dense(64, activation='relu'))

model.add(layers.Dense(46, activation='softmax'))

model.compile(optimizer='rmsprop',

loss='categorical_crossentropy',

metrics=['accuracy'])

model.fit(partial_x_train,

partial_y_train,

epochs=6,

batch_size=512,

validation_data=(x_val, y_val))

test_loss, test_accu = model.evaluate(x_test, one_hot_test_labels)

test_loss, test_accu

(0.99986814900582532, 0.77649154056954994)

3.5.5 Generating predictions on new data

predictions = model.predict(x_test)

predictions.shape # 2246 test inputs, each with 46 possible outcomes

(2246, 46)

np.sum(predictions[0]) # the sum of the probability of all outcomes for the 1st input

1.0

np.argmax(predictions[0]) # the class with the highest probability for the 1st imput

3

predictions[0][3] # the probability of the most possible outcome

0.74235409

3.5.6 A different way to handle the labels and the loss

If integer labels are used, you should use sparse_categorical_ crossentropy:

model.compile(optimizer='rmsprop', loss='sparse_categorical_crossentropy', metrics=['acc'])

3.5.7 The importance of having sufficiently large intermediate layers

You will introduce an information bottleneck by having intermediate layers that are significantly less than the input's dimensions.

The validation accuracy will have a significant drop. This drop is mostly due to the fact that you’re trying to compress a lot of information into an intermediate space that is too low-dimensional.

3.5.8 Further experiments

3.5.9 Wrapping up

3.6 Predicting house prices: a regression example

To predict a continuous value instead of a discrete label problem is called regression.

For instance, predicting the temperature tomorrow.

3.6.1 The Boston Housing Price dataset

The dataset has relatively few data points: only 506, split between 404 training samples and 102 test samples. And each feature in the input data (for example, the crime rate) has a different scale.

To load the Boston housing dataset

from keras.datasets import boston_housing

(train_data, train_targets), (test_data, test_targets) = boston_housing.load_data()

train_data.shape

(404, 13) # 404 training samples, 13 features

test_data.shape

(102, 13) # 102 test samples, 13 features

train_targets # the median values of owner-occupied homes ( in thousands of dollars)

[ 15.2, 42.3, 50. ... 19.4, 19.4, 29.1]

3.6.2 Preparing the data

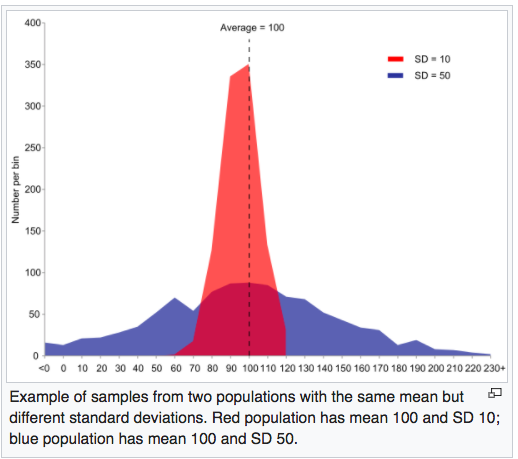

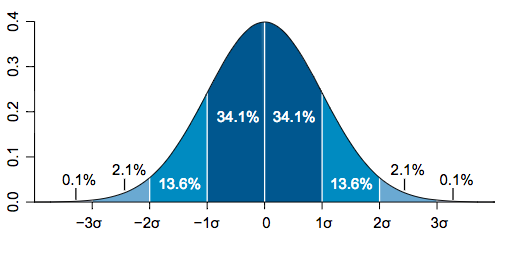

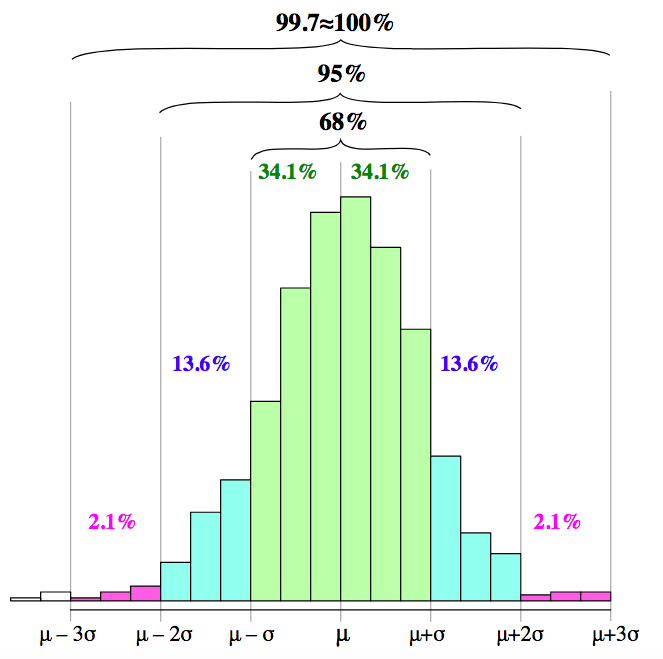

A widespread best practice to deal with data having wildly different ranges is to do do feature-wise normalization: for each feature in the input data (a column in the input data matrix), you subtract the mean of the feature and divide by the standard deviation, so that the feature's distribution is centered around 0 and has a unit standard deviation.

(x - Mean) / Standard Deviation

the 68–95–99.7 rule is a shorthand used to remember the percentage of values

把一個資料看成是一個樣本空間上的一點, 資料的n個feature就對應到n維空間上的每個座標上.

在樣本空間上,所有點座標其分量都相等的點(u, u, u, ...)所成的集合可被視為通過原點的對角線. 2維空間有2條對角線, 3維空間有3條對角線.

標準差可以被視為一個從n維空間的一個點到一條直線的距離的函數.

mean = train_data.mean(axis=0)

std = train_data.std(axis=0)

train_data -= mean

train_data /= std

test_data -= mean

test_data /= std

>>> print(results)

[[0. 0. 0. 0.]

[1. 1. 1. 0.]

[0. 0. 0. 0.]]

>>> results.mean(axis=0)

array([0.33333333, 0.33333333, 0.33333333, 0. ])

>>> results.mean(axis=1)

array([0. , 0.75, 0. ])

3.6.3 Building your network

In general, the less training data you have, the worse overfitting will be, and using a small network is one way to mitigate overfitting.We use a very small network with two hidden layers, each with 64 units.

from keras import models

from keras import layers

# you use a function to construct it so that you can instantiate the same model multiple times

def build_model():

model = models.Sequential()

model.add(layers.Dense(64, activation='relu',

input_shape=(train_data.shape[1],)))

model.add(layers.Dense(64, activation='relu'))

model.add(layers.Dense(1))

model.compile(optimizer='rmsprop', loss='mse', metrics=['mae'])

return model

- The network ends with a single unit and no activation (it will be a linear layer). This is a typical setup for scalar regression (a regression where you’re trying to predict a single continuous value).

- the mse (mean squared error) loss function is used

- metric used for monitoring during training is mean absolute error (MAE)

3.6.4 Validating your approach using K-fold validation

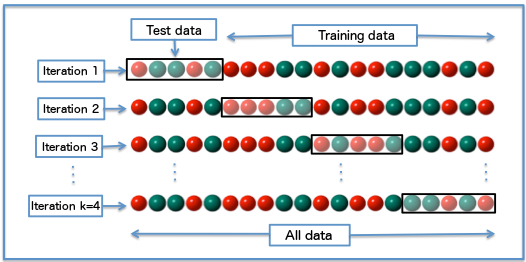

The validation scores might have a high variance with regard to the validation split because the data points are too few.The best practice in such situations is to use K-fold cross-validation. ( sometimes called rotation estimation )

One of the main reasons for using cross-validation instead of using the conventional validation (e.g. partitioning the data set into two sets of 70% for training and 30% for test) is that there is not enough data available to partition it into separate training and test sets without losing significant modelling or testing capability.

It consists of:

- splitting the available data into K partitions

- instantiating K identical models, and training each one on K – 1 partitions while evaluating on the remaining partition

- The validation score for the model used is then the average of the K validation scores

import numpy as np

k=4

num_val_samples = int( len(train_data) / k )

num_epochs = 100

all_scores = []

for i in range(k):

# Prepares the validation data: data from partition #k

val_data = train_data[i * num_val_samples: (i + 1) * num_val_samples]

val_targets = train_targets[i * num_val_samples: (i + 1) * num_val_samples]

# Prepares the training data: data from all other partitions

if ( i==0 ):

partial_train_data = train_data[num_val_samples:]

partial_train_targets = train_targets[num_val_samples:]

else:

partial_train_data = np.concatenate(

[train_data[:i * num_val_samples],

train_data[(i + 1) * num_val_samples:]], axis=0)

partial_train_targets = np.concatenate( [train_targets[:i * num_val_samples],

train_targets[(i + 1) * num_val_samples:]], axis=0)

# Builds the Keras model (already compiled)

model = build_model()

# Trains the model ( in silent mode, verbose=0 )

history = model.fit(partial_train_data, partial_train_targets,

validation_data=(val_data, val_targets),

epochs=num_epochs, batch_size=1, verbose=0)

val_mse, val_mae = model.evaluate(val_data, val_targets, verbose=0)

all_scores.append(val_mae)

numpy.concatenate( (a1, a2, ...), axis=0, out=None):

Join a sequence of arrays along an existing axis.

The results:

>>> all_scores

[2.1638144738603344, 2.8611690266297596, 2.552104407017774, 2.3926332126749625]

>>> np.mean(all_scores)

2.4924302800457077

Let’s try training the network a bit longer: 500 epochs.

num_epochs = 500

all_mae_histories = []

for i in range(k):

print('processing fold #', i, '/', k)

# Prepare the validation data: data from partition #i

val_data = train_data[i * num_val_samples: (i + 1) * num_val_samples]

val_targets = train_targets[i * num_val_samples: (i + 1) * num_val_samples]

# Prepares the training data: data from all other partitions

if ( i==0 ):

partial_train_data = train_data[num_val_samples:]

partial_train_targets = train_targets[num_val_samples:]

else:

partial_train_data = np.concatenate(

[train_data[:i * num_val_samples],

train_data[(i + 1) * num_val_samples:]], axis=0)

partial_train_targets = np.concatenate( [train_targets[:i * num_val_samples],

train_targets[(i + 1) * num_val_samples:]], axis=0)

print(len(val_data), len(partial_train_data))

# Builds the Keras model (already compiled)

model = build_model()

# Trains the model ( in silent mode, verbose=0 )

history = model.fit(partial_train_data, partial_train_targets,

validation_data=(val_data, val_targets),

epochs=num_epochs, batch_size=1, verbose=0)

mae_history = history.history['val_mean_absolute_error']

all_mae_histories.append(mae_history)

type(all_mae_histories)

list

len(all_mae_histories)

4

len(all_mae_histories[0])

500

average_mae_history = [

np.mean([x[i] for x in all_mae_histories]) for i in range(num_epochs)]

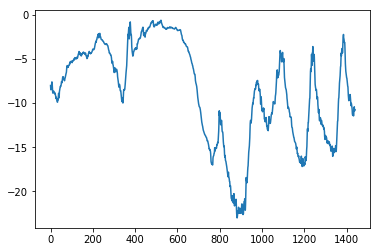

import matplotlib.pyplot as plt

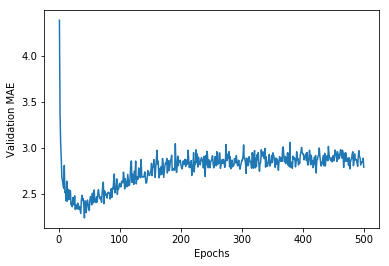

plt.plot(range(1, len(average_mae_history) + 1), average_mae_history)

plt.xlabel('Epochs')

plt.ylabel('Validation MAE')

plt.show()

It may be a little difficult to see the plot, due to scaling issues and relatively high variance.

We can find the minimum MAE of each data fold and correspond indexes:

for i in range(4):

print( all_mae_histories[i].index( min(all_mae_histories[i]) ),':', min(all_mae_histories[i]) )

32 : 1.81601905823

41 : 2.20111931433

39 : 2.3219555581

51 : 2.347481907

Therefore, the minimum happened before 51 epochs. Past that point, you start overfitting.

We can train a final production model on all of the training data, with the best parameters, and then look at its performance on the test data.

model = build_model()

model.fit(train_data, train_targets, epochs=60, batch_size=16, verbose=0)

test_mse_score, test_mae_score = model.evaluate(test_data, test_targets)

test_mae_score

2.8500603601044299

3.6.5 Wrapping up

- Mean squared error (MSE) is a loss function commonly used for regression.

- A common regression metric is mean absolute error (MAE).

- When features in the input data have values in different ranges, each feature should be scaled independently as a preprocessing step.

- When there is little data available, using K-fold validation is a great way to reliably evaluate a model.

- When little training data is available, it’s preferable to use a small network with few hidden layers (typically only one or two), in order to avoid severe overfitting.

4 Fundamentals of machine learning

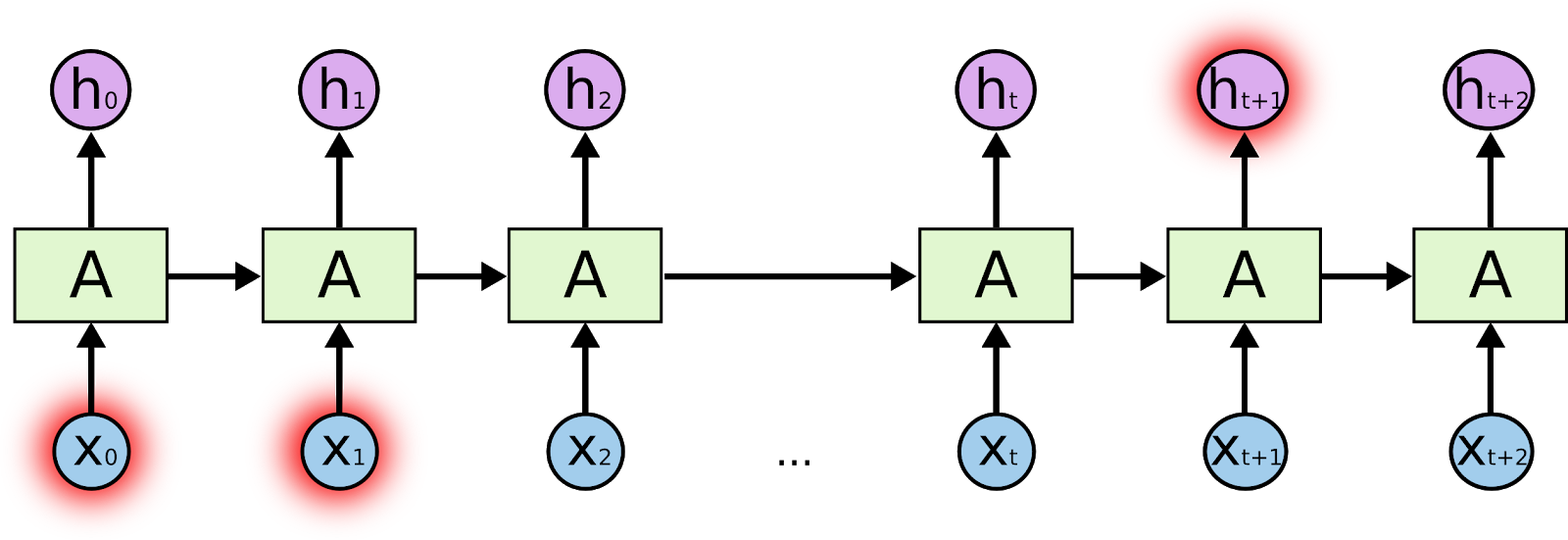

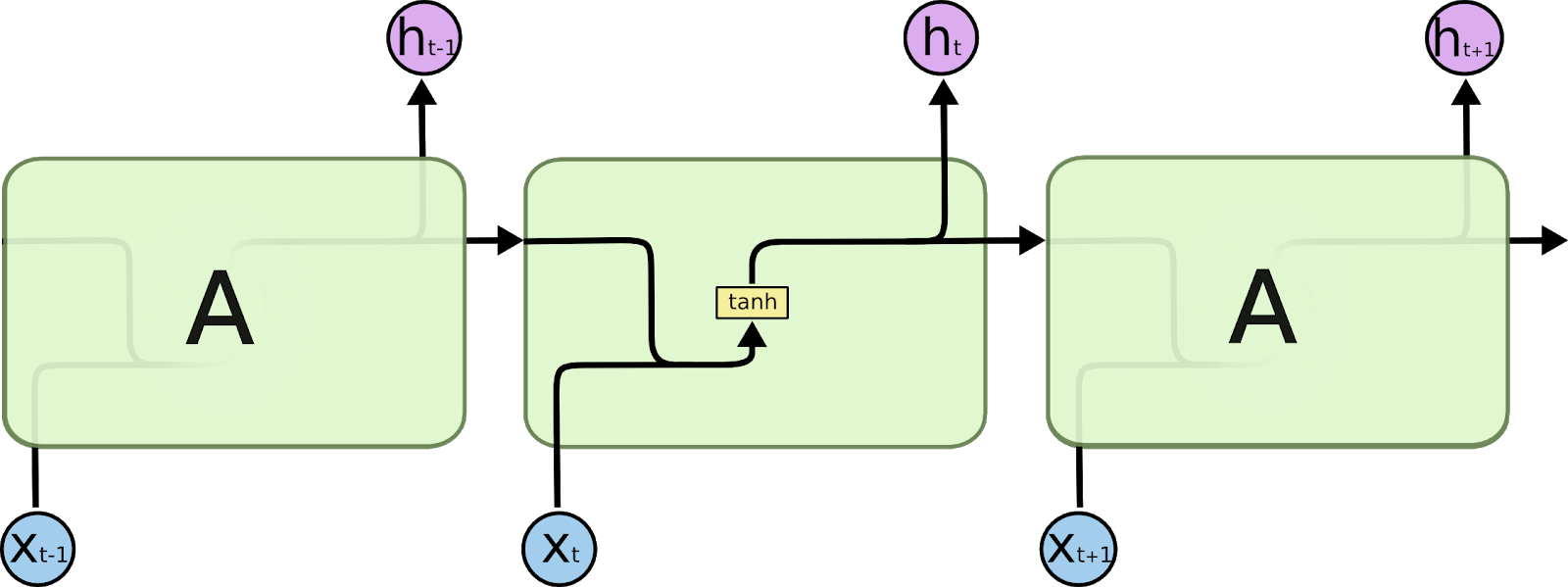

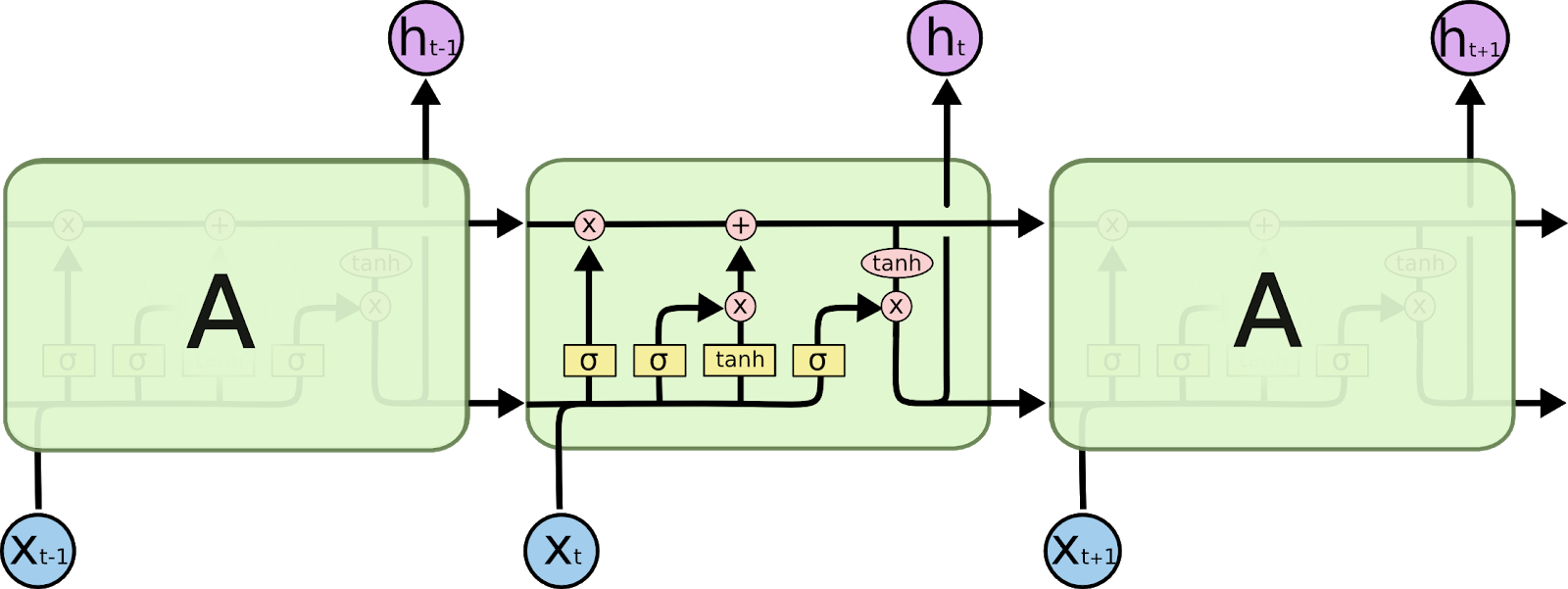

4.1 Four branches of machine learning

4.1.1 Supervised learning

It consists of learning to map input data to known targets.4.1.2 Unsupervised learning

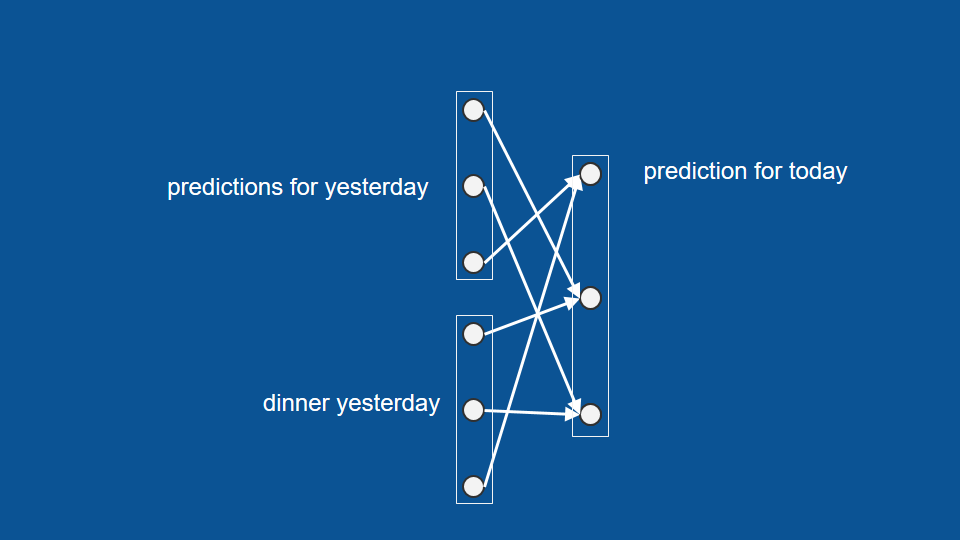

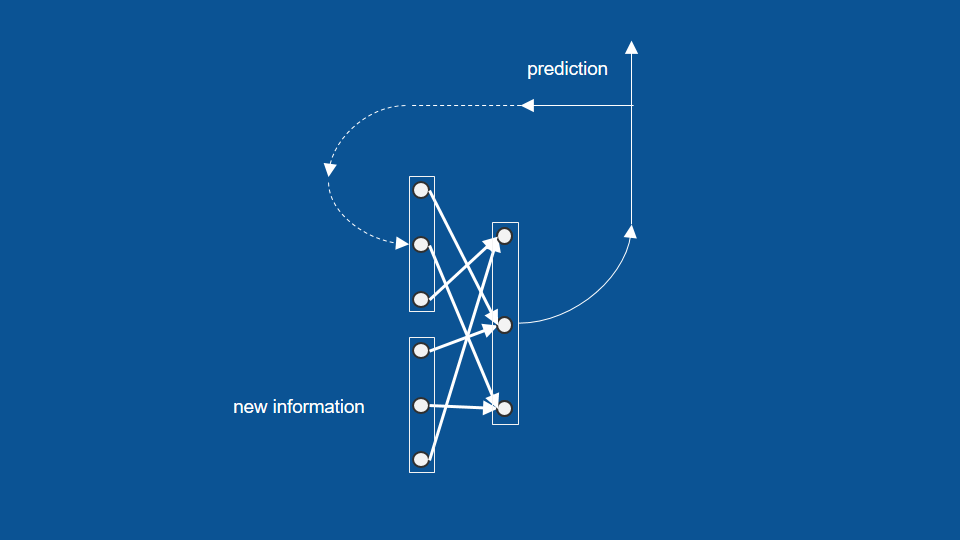

It consists of finding interesting transformations of the input data without the help of any targets.4.1.3 Self-supervised learning

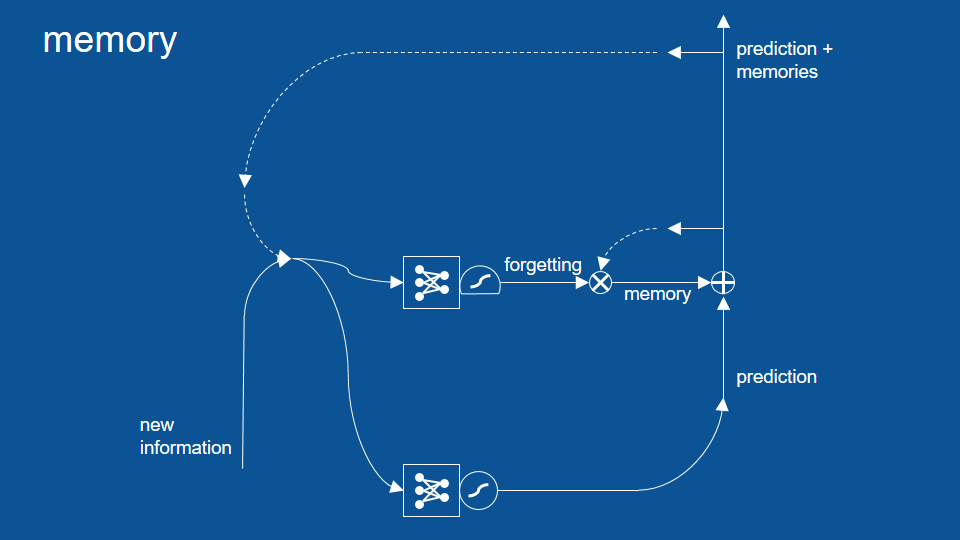

It is supervised learning without any humans in the loop.

It's a kind of learning based on previous input.

4.1.4 Reinforcement learning

An agent receives information about its environment and learns to choose actions that will maximize some reward.4.2 Evaluating machine-learning models

The data is splitted into a training set, a validation set, and a test set.4.2.1 Training, validation, and test sets

You train on the training data and evaluate your model on the validation data. Once your model is ready for prime time, you test it one final time on the test data.Why is the validation set necessary? The reason is that developing a model always involves tuning its configuration: for example, choosing the number of layers or the size of the layers(called the hyper-parameters of the model).

In essence, this tuning is a form of learning: a search for a good configuration in some parameter space.

You’ll end up with a model that performs artificially well on the validation data, so you need to use a completely different, never-before-seen dataset to evaluate the model: the test dataset.

Your model shouldn’t have had access to any information about the test set, even indirectly.

Let’s review three classic evaluation recipes,

- simple hold-out validation

#

num_validation_samples = 10000

# Shuffling the data is usually appropriate

np.random.shuffle(data)

# Defines the validation set

validation_data = data[:num_validation_samples]

# Defines the training set

data = data[num_validation_samples:]

training_data = data[:]

model = get_model()

model.train(training_data) # train the model on the training set

validation_score = model.evaluate(validation_data) # and evaluates it on the validation set

# At this point you can tune your model,

# retrain it, evaluate it, tune it again...

model = get_model()

# to train your final model from scratch on all non-test data available.

model.train( np.concatenate( [training_data, validation_data] ) )

test_score = model.evaluate(test_data)

4.3 Data preprocessing, feature engineering, and feature learning

4.3.1 Data preprocessing for neural networks

- vectorization you must first turn all inputs into tensors of integers, a step called data vectorization. For ex., to represent a sentence as a list of integer index of words.

- normalization Take relatively large values can trigger large gradient updates that will prevent the network from converging. To make learning easier for your network, your data should have the following characteristics:

- small ranges — Typically, most values should be in the 0 ~ 1 range.

- homogenous — That is, all features should take values in roughly the same range.

x -= x.mean(axis=0) # Normalize each feature independently to have a mean of 0

x /= x.std(axis=0) # Normalize each feature independently to have a standard deviation of 1.

4.3.2 Feature engineering

Feature engineering is the process of using your own knowledge about the input data and transform the input to be the data which can make the machine-learning algorithm work better by applying hardcoded (nonlearned) transformations to the data before it goes into the model.

4.4 Overfitting and underfitting

The fundamental issue in machine learning is the tension between optimization and generalization.

A model trained on more data will naturally generalize better.

If a network can only afford to memorize a small number of patterns, the optimization process will force it to focus on the most prominent patterns, which have a better chance of generalizing well.

4.4.1 Reducing the network’s size

Unfortunately, there is no magical formula to determine the right number of layers or the right size for each layer.The general workflow to find an appropriate model size is to start with relatively few layers and parameters, and increase the size of the layers or add new layers until you see diminishing returns with regard to validation loss.

4.4.2 Adding weight regularization

奥卡姆剃刀(英语:Occam's Razor, Ockham's Razor)是一個解決問題的法則. 同一個問題有許多種理論,若每一種都能做出同樣的預測,那麼應該挑選裡面假設最少的。

This idea also applies to the models learned by neural networks: given some training data and a network architecture, multiple sets of weight values (multiple models) could explain the data. Simpler models are less likely to overfit than complex ones.

A common way to mitigate overfitting is to put constraints on the complexity of a network by forcing its weights to take only small values, which makes the distribution of weight values more regular. This is called weight regularization, and it’s done by adding to the loss function of the network a cost associated with having large weights. This cost comes in two flavors:

- L1 regularization—The cost added is proportional to the absolute value of the weight coefficients (the L1 norm of the weights).

- L2 regularization—The cost added is proportional to the square of the value of the weight coefficients (the L2 norm of the weights).

from keras import regularizers

model = models.Sequential()

# Adding L2 weight regularization to the model

# l2(0.001) means every coefficient in the weight matrix of the layer will add

# 0.001 * weight_coefficient_value to the total loss of the network.

model.add(layers.Dense(16, kernel_regularizer=regularizers.l2(0.001), activation='relu', input_shape=(10000,)))

model.add(layers.Dense(16, kernel_regularizer=regularizers.l2(0.001), activation='relu'))

model.add(layers.Dense(1, activation='sigmoid'))

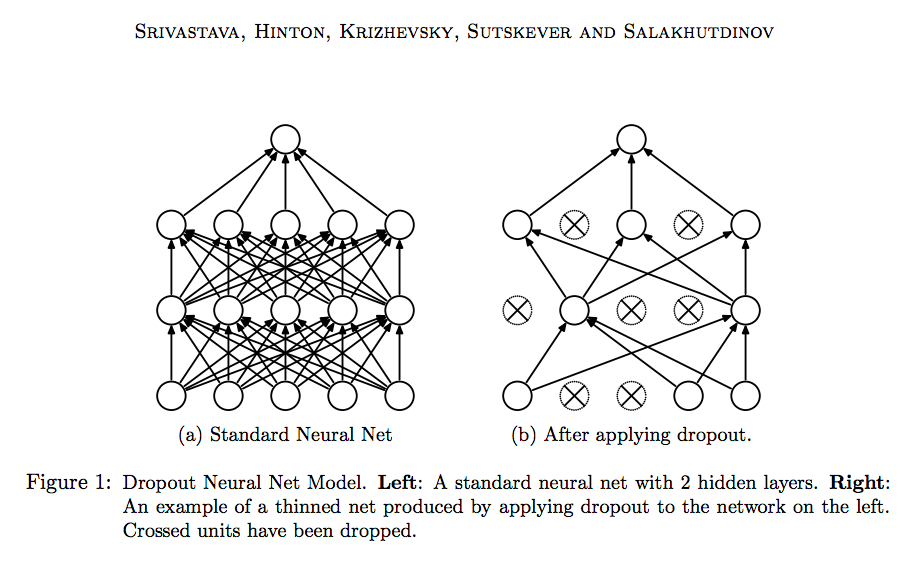

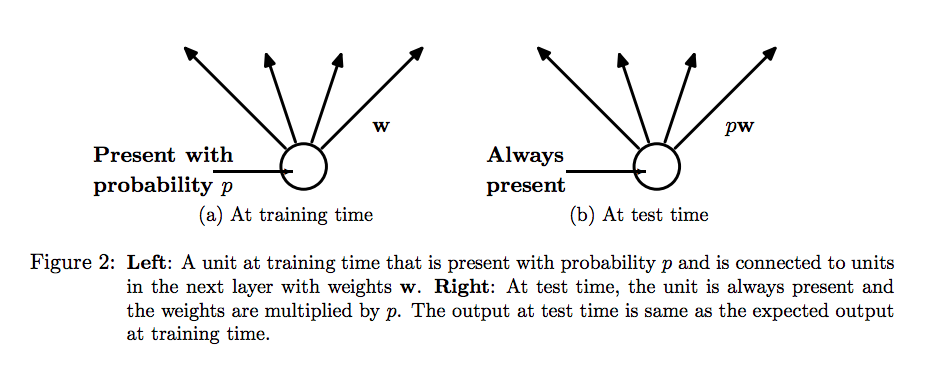

4.4.3 Adding dropout

Dropout, applied to a layer, consists of randomly dropping out a number of output features(setting weight coefficients to zero) of the layer during training.

In Keras, you can introduce dropout in a network via the Dropout layer, which is applied to the output of the layer right before it:

model.add(layers.Dropout(0.5))

4.5 The universal workflow of machine learning

4.5.1 Defining the problem and assembling a dataset

- You can only learn to predict something if you have available training data.

- Identifying the problem's classification type(binary, multi-class, regression, etc) will guide your choice of model architecture, loss function, and so on.

- Make sure your outputs can be predicted given your inputs.

4.5.2 Choosing a measure of success

Your metric for success will guide the choice of a loss function: what your model will optimize.

4.5.3 Deciding on an evaluation protocol

You must establish how you’ll measure your current progress during the training.

4.5.4 Preparing your data

You should format your data in a way that can be fed into a machine-learning model.

4.5.5 Developing a model that does better than a baseline

Choosing the right last-layer activation and loss function for your model:

| Problem type | Last-layer activation | Loss function |

|---|---|---|

| Binary classification | sigmoid | binary_crossentropy |

| Multiclass, single-label classification | softmax | categorical_crossentropy |

| Multiclass, multilabel classification | sigmoid | binary_crossentropy |

| Regression to arbitrary values | None | mse |

| Regression to values between 0 and 1 | sigmoid | mse or binary_crossentropy |

4.5.6 Scaling up: developing a model that overfits

Always monitor the training loss and validation loss, as well as the training and validation values for any metrics you care about. When you see that the model’s performance on the validation data begins to degrade, you’ve achieved overfitting.

4.5.7

Regularizing your model and tuning your hyperparameters

PART 2 Deep Learning in Practice

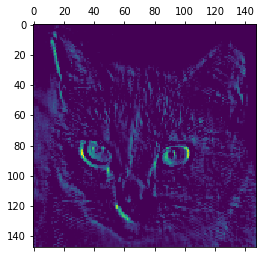

5 Deep learning for computer vision

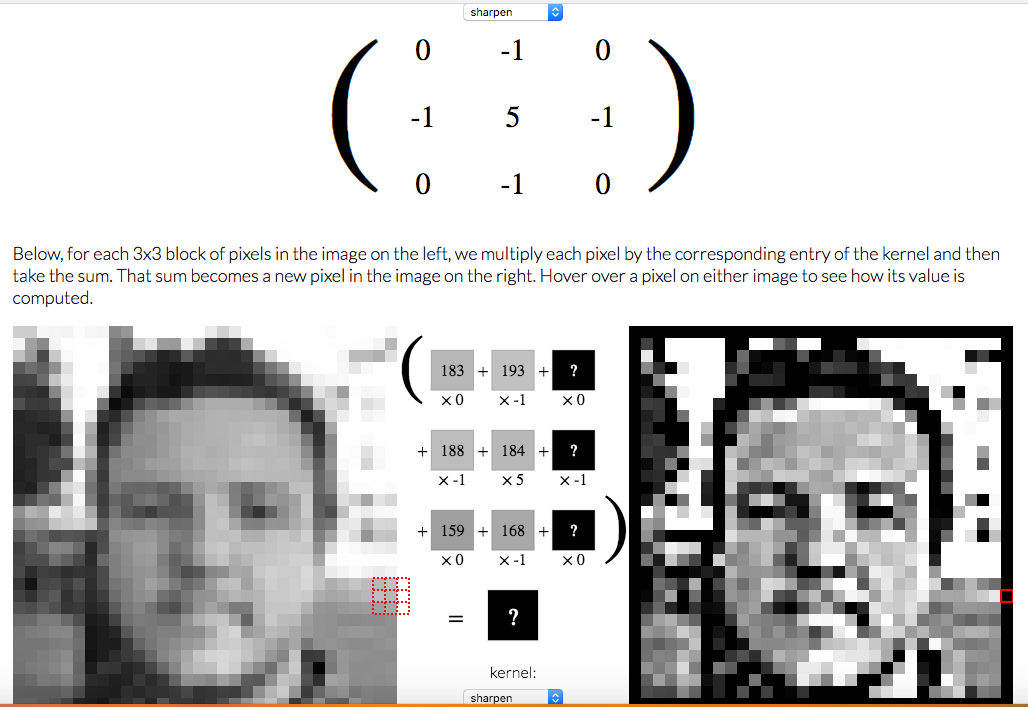

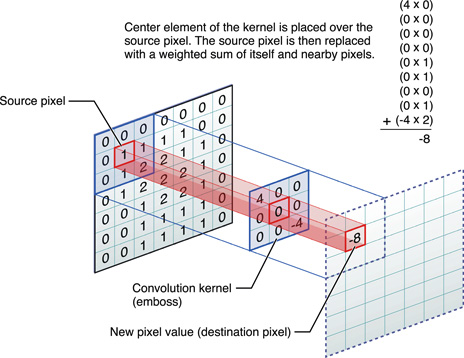

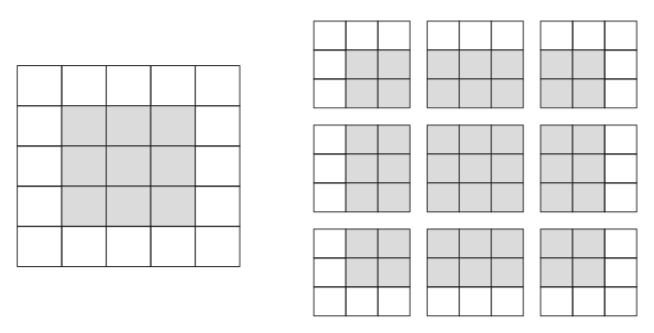

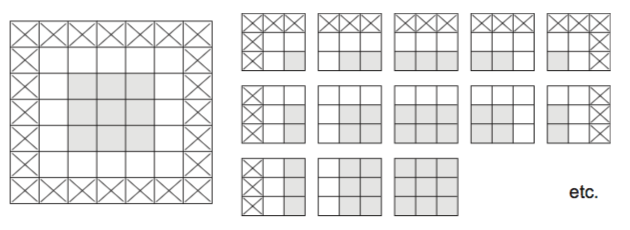

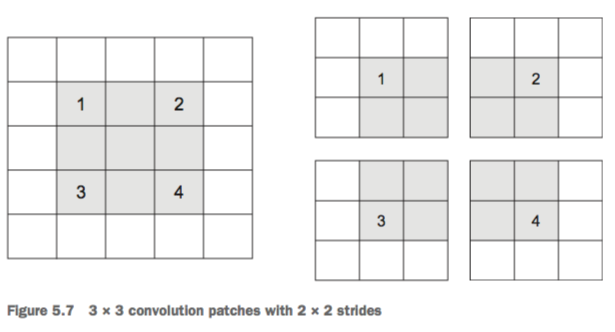

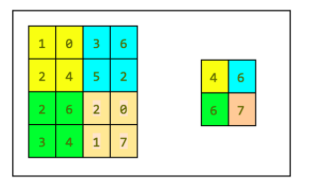

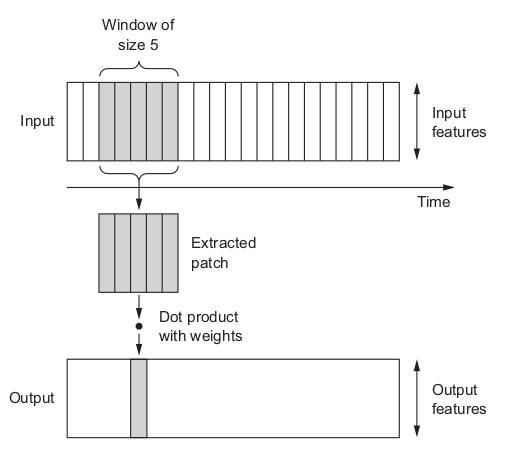

Convolution is the process of adding each element of the image to its local neighbors, weighted by the kernel.

The output of image convolution is calculated as follows:

- Place the kernel anchor on top of a determined pixel, with the rest of the kernel overlaying the corresponding local pixels in the image.

- Multiply the kernel coefficients by the corresponding image pixel values and sum the result.

- Place the result to the location of the anchor in the input image.

- Repeat the process for all pixels by scanning the kernel over the entire image.

An image kernel is a small matrix used in machine learning for feature extraction, a technique for determining the most important portions of an image.

The process is referred to more generally as "convolution".

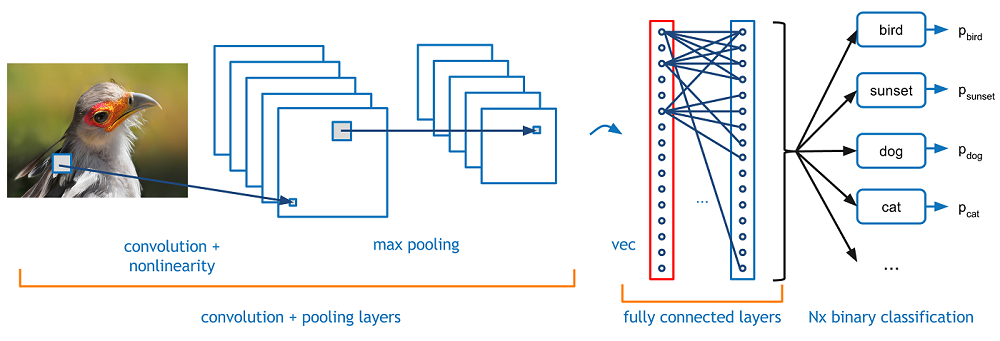

The convolutional neural networks is also known as convnets.

Convolutional neural networks (CNNs) are the current state-of-the-art model architecture for image classification tasks.

CNNs are very similar to ordinary Neural Networks from the previous chapter: they are made up of neurons that have learnable weights and biases.

The difference is that ConvNet architectures make the explicit assumption that the inputs are images.

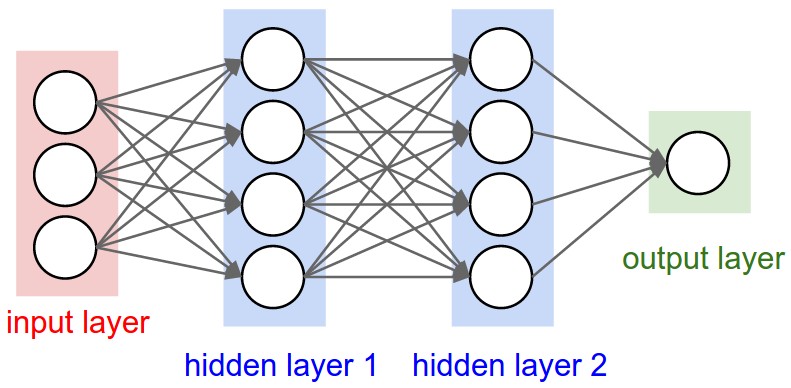

Neural Networks receive an input (a single vector), and transform it through a series of hidden layers. Each hidden layer is made up of a set of neurons, where each neuron is fully connected to all neurons in the previous layer, and where neurons in a single layer function completely independently and do not share any connections. The last fully-connected layer is called the “output layer” and in classification settings it represents the class scores.

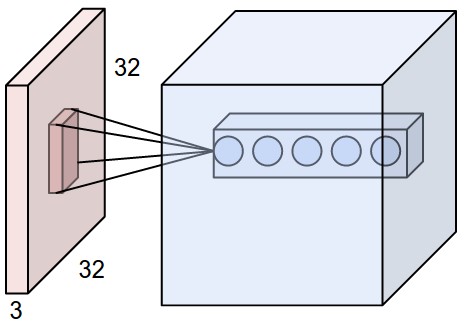

Regular Neural Nets don’t scale well to full images. (Jerry: it's because the input is arranged in 1 dimensional array )

For images are of size 32x32x3 (32 wide, 32 high, 3 color channels), a single fully-connected neuron in a first hidden layer of a regular Neural Network would have 32*32*3 = 3072 weights. More neurons need more parameters. Clearly, this full connectivity is wasteful and the huge number of parameters would quickly lead to overfitting.

Unlike a regular Neural Network, the layers of a ConvNet have neurons arranged in 3 dimensions: width, height, depth.

Every layer of a ConvNet transforms the 3D input volume to a 3D output volume of neuron activations.

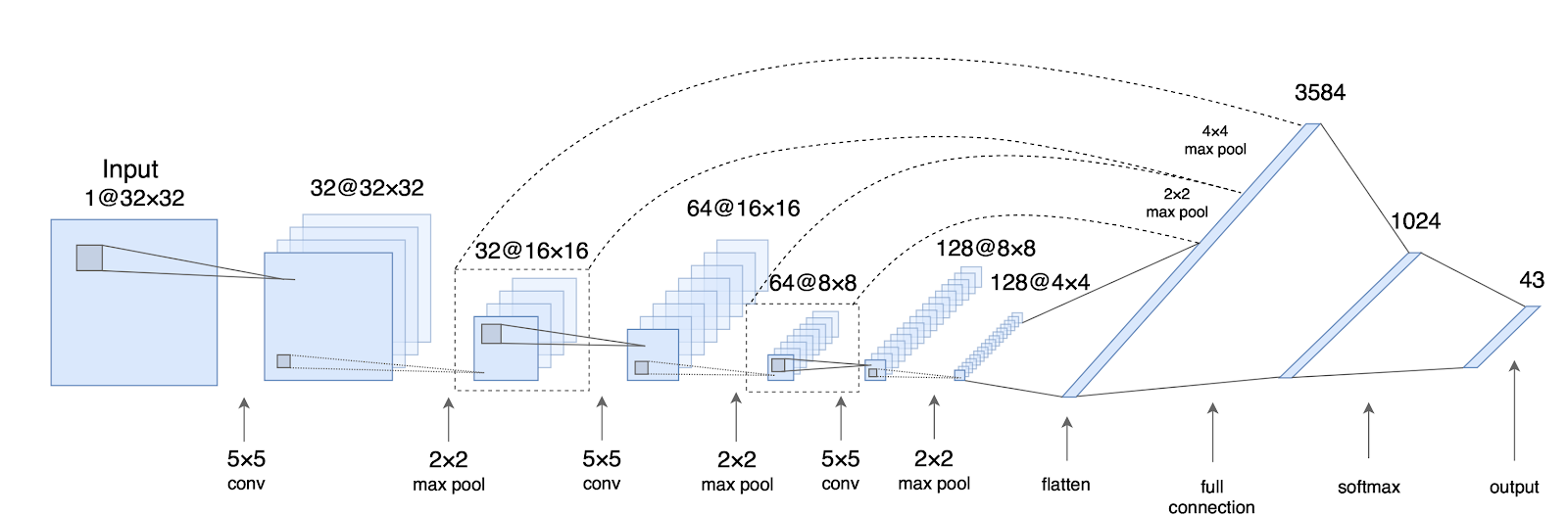

A simple ConvNet for CIFAR-10 dataset( 60000 32x32 colour images in 10 classes ) classification could have the architecture:

- INPUT [32x32x3] will hold the raw pixel values of the image, in this case an image of width 32, height 32, and with three color channels R,G,B.

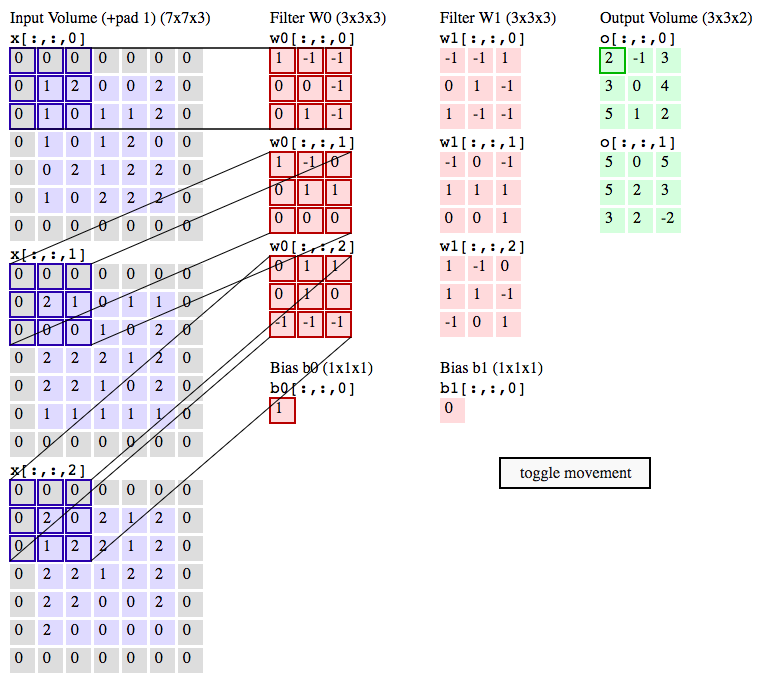

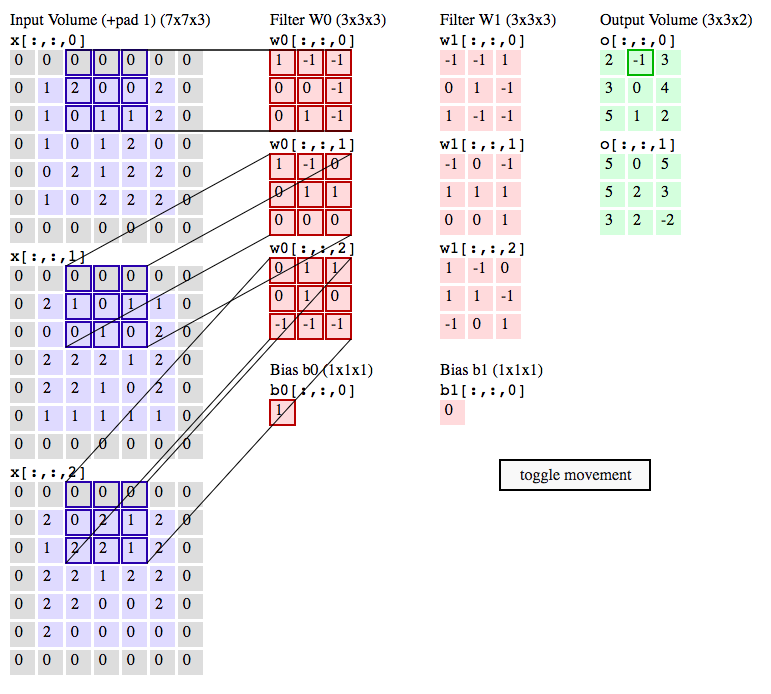

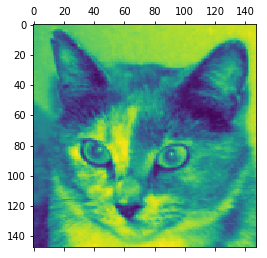

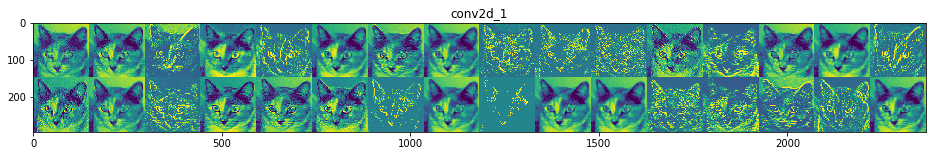

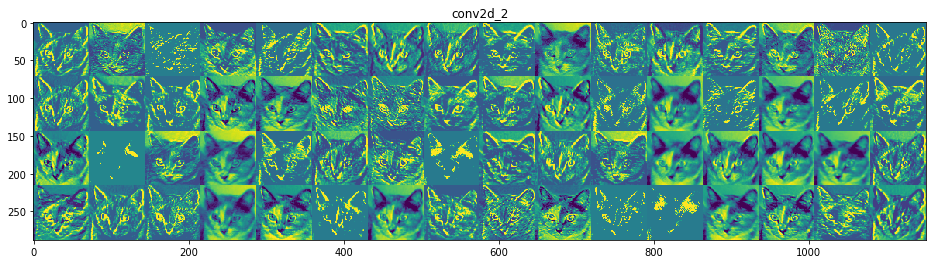

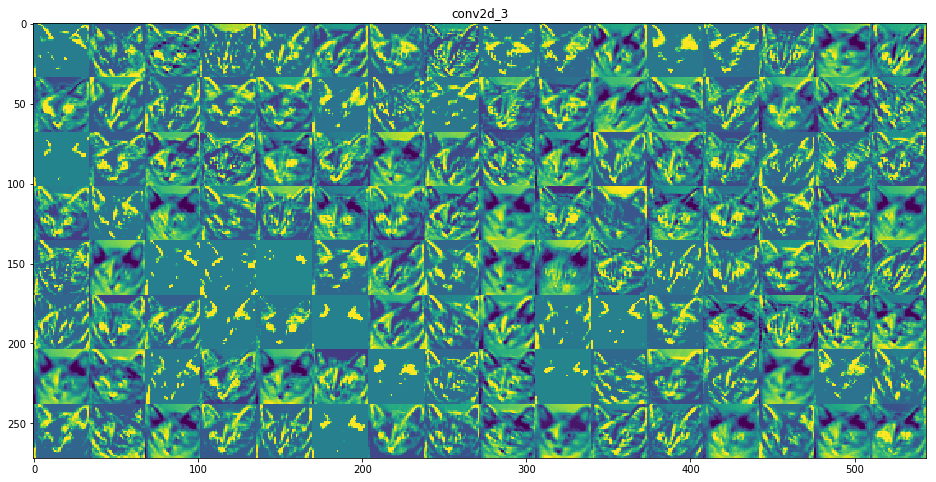

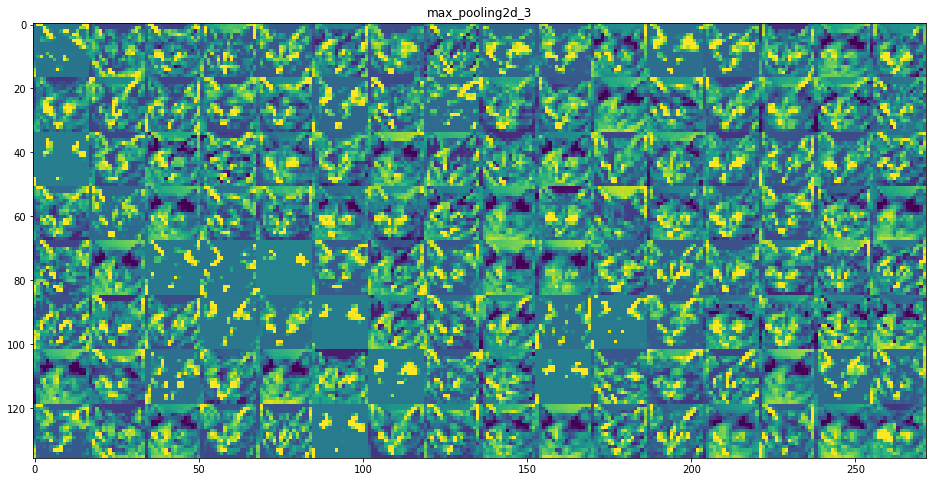

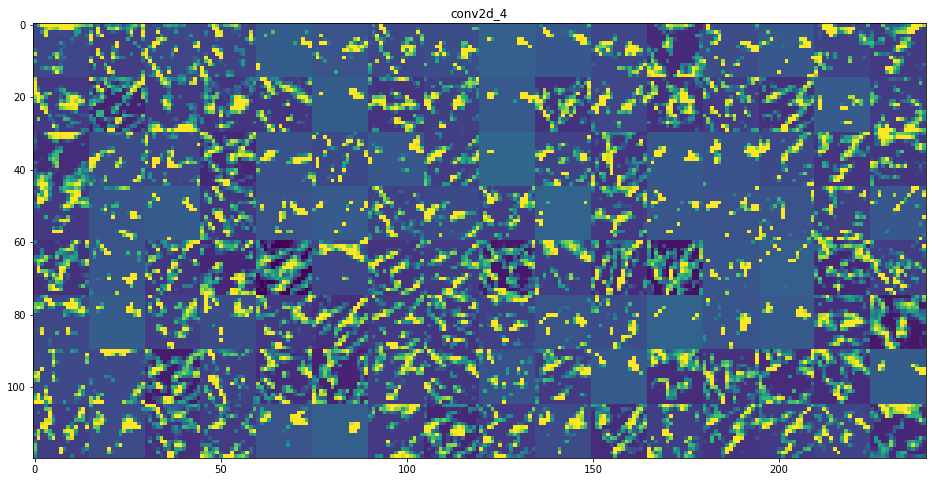

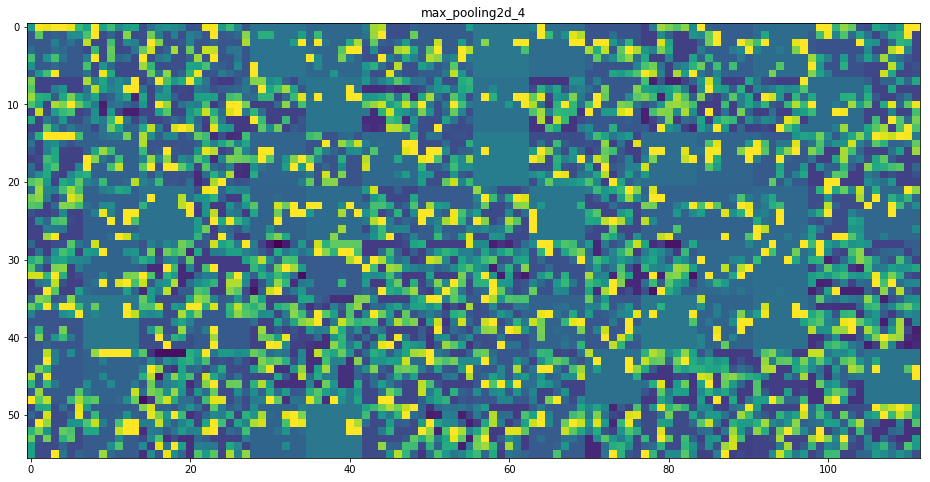

- CONV layer will compute the output of neurons that are connected to local regions in the input, each computing a dot product between their weights and a small region they are connected to in the input volume. This may result in volume such as [32x32x12] if we decided to use 12 filters.(extract out 12 features)

- RELU layer will apply an element-wise activation function, such as the max(0,x)max(0,x) thresholding at zero. This leaves the size of the volume unchanged ([32x32x12]).

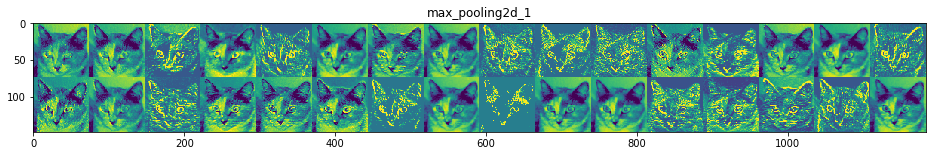

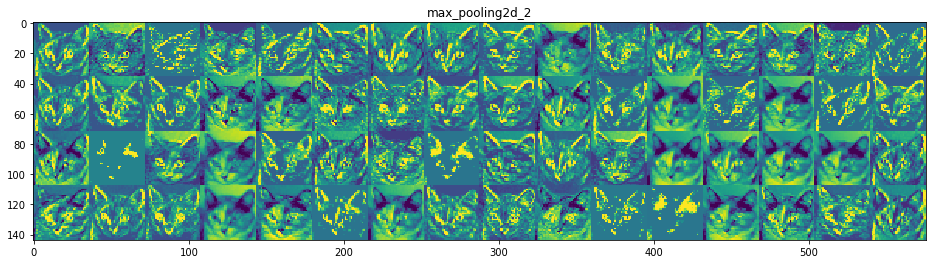

- POOL layer will perform a downsampling operation along the spatial dimensions (width, height), resulting in volume such as [16x16x12].

- FC (i.e. fully-connected) layer will compute the class scores, resulting in volume of size [1x1x10], where each of the 10 numbers correspond to a class score, such as among the 10 categories of CIFAR-10. As with ordinary Neural Networks and as the name implies, each neuron in this layer will be connected to all the numbers in the previous volume.

The parameters in the CONV/FC layers will be trained with gradient descent so that the class scores that the ConvNet computes are consistent with the labels in the training set for each image.

The Conv layer is the core building block of a Convolutional Network that does most of the computational heavy lifting.

When training a convnet, we don’t know what the values for our kernels and therefore have to figure them out by learning them.

The CONV layer’s parameters consist of a set of learnable filters. Every filter is small spatially (along width and height), but extends through the full depth of the input volume.